Clinical Trial Performance Metrics: The Core KPIs

by bmzinck | Oct 7, 2021 | Blog

Clinical Operations as a distinct corporate function and department has evolved into a standard corporate model at sponsor and CRO companies, with all the trappings: Titles (e.g. Director of ClinOps), standard roles, its own language, a bevy of software applications, and dedicated trade events. As with any corporate function, it needs a set of metrics to provide a way to measure performance. And while the adoption of clinical trial performance metrics — KPIs — has been uneven, ClinOps professionals today tend to identify a common set of indicators.

The Need for Metrics Isn’t New

In Applied Clinical Trials, way back in 2016, the issue of clinical study KPIs was already coming into focus. In an article titled “Finally, Standardized KPIs are Front and Center,” author Kenneth A. Getz identified the following as some of the most important things to measure in a clinical trial:

- Proportion of final databases locked on time

- Mean number of protocol amendments post-protocol approval

- Proportion of studies completing patient enrollment on time

- Mean number of protocol deviations per study volunteer

- Proportion of studies with investigative sites activated on time

- Mean number of times databases are unlocked per study

- Proportion of vendors with critical findings following an audit

That list is a fine start, but it is focused a little narrowly on technical study factors, rather than operational team factors, within each study.

ClinOps leaders at sponsors and CROs need metrics that measure their organization, not just the studies they are responsible for. That may start with a simple Red/Yellow/Green indicator for each study, but a larger set of indicators has the potential to signal trends and issues across studies.

A List of Clinical Operations Metrics

Based on a completely unscientific survey of ClinOps leaders working with Agatha, I have aggregated a list of what they feel are the most important measures for their organization as well as the studies they are running. Each metric is followed by the item actually measured, in parentheses. Many of these are being incorporated into Agatha’s dashboard capabilities in our ClinOps applications suite.

Core ClinOps Metrics

These are measurements of the core ClinOps organization, intended to capture the capacity and performance of the team. In most cases, the best approach is to track them monthly and show them as column charts to illustrate trends over time.

- Number of Studies Underway (Studies)

- Studies Completed Last 12 Months (Studies)

- Number of Countries in Each Study (Countries)

- Number of Sites in Each Study (Sites)

- Number of Patients in Each Study/Phase (Patients)

- Level of Outsourcing (% of work completed in house vs. outsourced)

- Number of Vendors utilized per Study (Vendors)

- Total ClinOps Team Members (People)

- Total FTE Count (People)

- Employee versus Contractor (People)

Quality Metrics

Quality metrics are perhaps the most important set of measurements because they highlight the team’s ability to execute trials accurately and effectively.

For each study:

- Number of CAPAs created and resolved (CAPAs)

- Number of AEs/SAEs per Site/Country/Study

- Number of database queries created and resolved (Queries)

- Number of Days to data entry per Site/Study (Days)

- Number of Monitoring Visits completed and reported per CMP (Monitoring Plan)

- Number of planned and unplanned SOP deviations (Deviations)

- Number of Audits completed at Site and Study Levels (Audits)

- Number of Audits with major findings (Findings)

- Number of Major Findings resolved and time to resolution (Findings)

- Patient Population Diversity (Patients)

Financial Metrics

Money matters, and tracking how dollars are spent is critical for the ClinOps team. Because the actual figures are sometimes confidential, you can share percentages with the whole team to help convey the importance of staying on budget.

- Total Study Budget (Dollars)

- Budget by Category (Dollars)

- Internal Budget (Dollars)

- Contractor/Vendor Budget (Dollars)

- Budget Consumed (Dollars)

- Budget Remaining (Dollars)

- Total Invoicing (Dollars)

- Payment and Collections (Dollars)

- Aging Report (Vendors)
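As a rough illustration of the percentage approach mentioned above, the sketch below derives Budget Remaining and a shareable "percent consumed" figure from two of the listed items. The class name, field names, and dollar amounts are hypothetical and only for illustration.

```python
from dataclasses import dataclass


@dataclass
class StudyBudget:
    """Hypothetical container for two of the financial metrics listed above."""
    total: float     # Total Study Budget, in dollars
    consumed: float  # Budget Consumed to date, in dollars

    @property
    def remaining(self) -> float:
        # Budget Remaining, in dollars
        return self.total - self.consumed

    @property
    def pct_consumed(self) -> float:
        # Percentage view, useful when the raw dollar figures are confidential
        if self.total <= 0:
            raise ValueError("total budget must be positive")
        return 100.0 * self.consumed / self.total


# Illustrative numbers only, not taken from any real study
budget = StudyBudget(total=2_000_000, consumed=1_500_000)
```

Here `budget.pct_consumed` can be shown to the whole team while `budget.remaining` stays with the people who need the dollar figure.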

TMF Metrics

As the central repository of study documentation, the Trial Master File can be used as a proxy for the state of the overall study. The “What’s Missing” measure is probably the most common measure across all the ClinOps leaders I talked to.

- Total TMF Items Expected (Documents)

- Total TMF Documents Collected (%)

- Total TMF Documents Approved (%)

- Missing TMF Documents (%)

- TMF Completeness (%)

- Average Approval Time per Document (Days)

- Items Awaiting Approval (Documents)

- Artifacts QC’ed (represented as a % of total artifacts uploaded)

- Artifacts QC’ed returned with errors (%) vs. no errors (%)
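The TMF percentages above can be derived from three counts. The sketch below is one possible way to do that; it assumes that percentages are taken against the number of expected items and that "collected" never exceeds "expected", which may not match how a given eTMF system defines completeness. The function and key names are hypothetical.

```python
def tmf_summary(expected: int, collected: int, approved: int) -> dict:
    """Derive TMF percentages from raw document counts.

    Assumption: every percentage uses the expected item count as denominator.
    """
    if expected <= 0:
        raise ValueError("expected must be positive")
    if not 0 <= approved <= collected <= expected:
        raise ValueError("counts must satisfy 0 <= approved <= collected <= expected")
    return {
        "collected_pct": 100.0 * collected / expected,
        "approved_pct": 100.0 * approved / expected,
        "missing_pct": 100.0 * (expected - collected) / expected,  # the "What's Missing" view
        "awaiting_approval": collected - approved,                 # a document count, not a percent
    }


# Illustrative counts only
summary = tmf_summary(expected=200, collected=150, approved=120)
```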

Cycle Times

Cycle times are tricky because every study has its own dynamics that drive the calendar. Using an approach that compares plan to actual is therefore important when looking at the cycle times of core study activities.

- Study and/or Site level IRB Submit to IRB Approval (Days)

- Study Approval To First Enrollment (Days)

- Total Time (Days) Per Phase

- Study End to Submission (Days)

- Time LPLV to Database Lock, DBL (Days)

- Time DBL to final CSR (Days)
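A plan-versus-actual comparison for any of these cycle times can be sketched as follows. The function names, the 30-day plan, and the dates are hypothetical; the only logic shown is counting calendar days between two milestones and subtracting the planned duration.

```python
from datetime import date


def cycle_days(start: date, end: date) -> int:
    """Calendar days between two milestone dates."""
    return (end - start).days


def plan_vs_actual(planned_days: int, start: date, end: date) -> dict:
    """Compare a planned cycle time with the actual one.

    A positive variance means the activity took longer than planned.
    """
    actual = cycle_days(start, end)
    return {"planned": planned_days, "actual": actual, "variance": actual - planned_days}


# Illustrative example: an activity planned for 30 days
result = plan_vs_actual(30, date(2021, 3, 1), date(2021, 4, 15))
```

Reporting the variance rather than the raw duration keeps studies with very different calendars comparable.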

As you look through this list you might find some clinical operations metrics missing. Let me know what I have missed. What do you feel are the most important things to measure in your operation?

Beginner’s Guide to Clinical Trial Performance Metrics

- Wendy Tate, PhD, GStat, Product Strategy Director

There is a lot of buzz about metrics in clinical research and the need for sites to use them. But what exactly are metrics? And why should sites get excited and involved? If you are new to metrics, these are important questions to understand. Here, we answer those questions and provide tips for how to start measuring and using metrics to improve your organization’s operational performance.

What are clinical trial performance metrics?

Clinical trial performance metrics (also commonly referred to as operational metrics, or key performance indicators) are data points that provide insight into operational performance. The use of metrics is two-fold: improving processes internally and strengthening relationships with sponsors.

While metrics have long been associated with site/sponsor relationships, the use of metrics among sites to improve internal processes is gaining traction.

Why should your site measure performance?

As noted above, sites can gain enormous benefit in terms of internally improving operations and working with sponsors.

Benefits for internal operations include:

- Identifying where process improvements can be made

- Identifying where resource allocations can be changed

- Effectively managing workload across teams

- Establishing performance benchmarks

- Providing data-driven rationale to leadership for additional resources

The benefits from the sponsor relationship perspective include:

- Identifying areas of strong competitive advantage

- Ability to complete site feasibility questionnaires with real data

Which key metrics should your site track?

There are many options when it comes to measuring operational performance. So where should your site begin? Specific metrics that could assist your site with internal processes and sponsor relationships include:

1) Cycle Time from Draft Budget Received from Sponsor to Budget Finalized:

The time (typically measured in days) between the date the first draft budget is received and the date the sponsor approves the budget.

What your site can learn: Long cycle times for this metric can signal your site to investigate further and identify areas where the process is being delayed. Such data can be used to start a conversation with the sponsor or CRO about finding ways to improve this process.

Sites with short cycle times for this metric can use the information to demonstrate their responsiveness and professionalism to sponsors and CROs.

2) Cycle Time from IRB Submit to IRB Approval:

The time (typically measured in days) between the date the initial submission packet is sent to the IRB and the date the protocol is approved or marked as exempt.

What your site can learn: If your site is experiencing delays in IRB approval, you can use this metric to start a conversation with your IRB about finding ways to work together to improve the approval process.

If your site routinely gets new trials approved in a timely fashion, sponsors and CROs will take notice. IRB approval is one of the first milestones in the life cycle of a clinical trial, and the variability between sites at this step is great. Use a good track record for this metric to your advantage when promoting your site’s abilities.

3) Cycle Time from Contract Fully Executed to Open to Enrollment:

The time (typically measured in days) between the date that all signatures—internal and sponsor—are complete and the date that subjects may be enrolled.

What your site can learn: Subject accrual is a significant challenge across the industry. As with other metrics, long cycle times here could indicate your site should identify areas where the process is delayed. Then, track this metric over time to verify any changes with a positive impact.

If your site’s performance for this metric is good, be sure to leverage this in negotiating with sponsors and CROs. Getting new protocols open to accrual faster means your site has more time to enroll subjects. Sites with a history of good performance for this metric may be selected first for future trials.
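The three cycle times described above all reduce to counting days between two milestone dates. The sketch below shows one way a site might compute them from a per-study record; the milestone keys and metric names are hypothetical, and a metric is left as `None` when either milestone has not happened yet.

```python
from datetime import date
from typing import Dict, Optional

# (metric name, start milestone, end milestone); all keys are hypothetical
METRICS = [
    ("budget_draft_to_final", "budget_draft_received", "budget_finalized"),
    ("irb_submit_to_approval", "irb_submitted", "irb_approved"),
    ("contract_to_open", "contract_executed", "open_to_enrollment"),
]


def site_cycle_times(milestones: Dict[str, date]) -> Dict[str, Optional[int]]:
    """Return each cycle time in days, or None if a milestone is missing."""
    results: Dict[str, Optional[int]] = {}
    for name, start_key, end_key in METRICS:
        start = milestones.get(start_key)
        end = milestones.get(end_key)
        results[name] = (end - start).days if start and end else None
    return results


# Illustrative dates only; this study is not yet open to enrollment
example = site_cycle_times({
    "budget_draft_received": date(2023, 1, 9),
    "budget_finalized": date(2023, 2, 20),
    "irb_submitted": date(2023, 1, 16),
    "irb_approved": date(2023, 2, 13),
    "contract_executed": date(2023, 3, 1),
})
```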

How to realize success

If you can measure it, you can improve it. Knowing the numbers/results from the metrics above can help you identify weaknesses and serve as a comparison over time for trend data as well as measure progress made. Tracking metrics over time is something to build into your process and the benefits of doing this are well worth the effort.


Wendy’s expertise is focused on the quantitative evaluation of clinical research administration, bringing over 20 years of clinical research experience across various roles.

Copyright © 2024 Advarra. All Rights Reserved.


Clinical Research KPIs

Clinical research KPIs play a crucial role in measuring the success of clinical trials. They help to identify the trial’s strengths and weaknesses, improve the allocation of resources, and establish benchmarks. These KPIs encompass a wide range of aspects, including patient recruitment, adherence to the protocol, data quality, and safety. Other important metrics include time to market, trial cost, and the number of publications resulting from the research. Measuring these clinical research KPIs can help clinical researchers to improve their trial designs, optimize patient outcomes, and accelerate the development of new treatments and therapies.

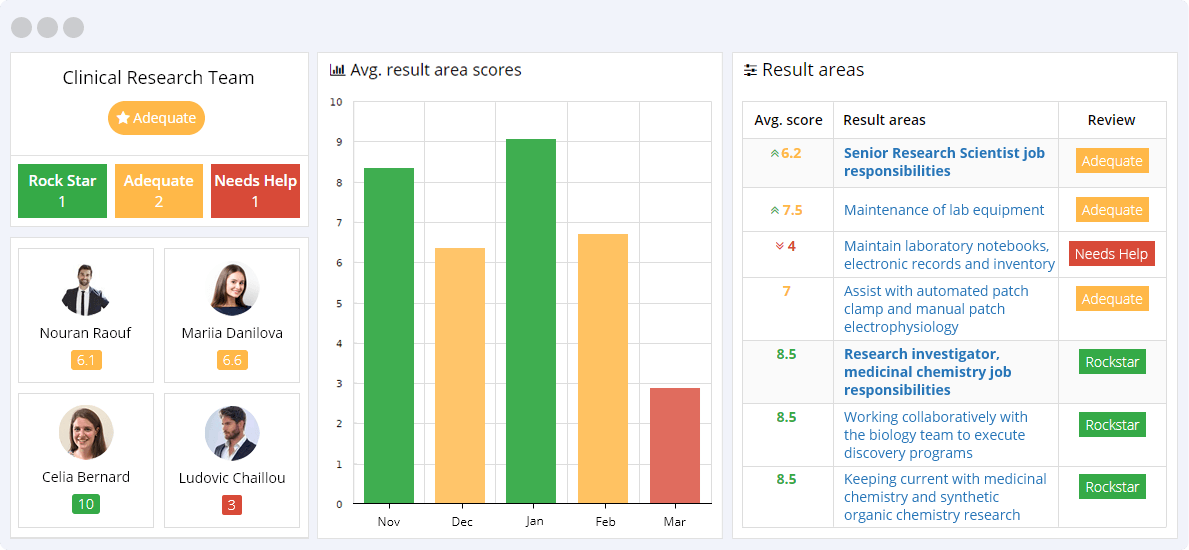

Click view all on the result area to see all corresponding clinical research KPIs

Get a free demo

Chief Scientific Officer Job Responsibilities

Chief Scientific Officer KPIs track the success of research and development, including patent filings, research publications, and the implementation of innovative technologies. They also measure the ability to lead a team, develop and execute research strategies, and drive innovation within the organization to achieve business goals. The KPIs ensure the efficient utilization of resources, successful project management, and timely delivery of results.

- Directing and mentoring the discovery team to conduct these activities, and motivating and mentoring employees for personal growth and professional achievement.

- Investigating and, where appropriate, implementing a wide variety of scientific principles and concepts to advance project goals and to identify previously unrecognized connections.

- Managing outsourced scientific activities and CROs, and integrating them seamlessly with internal workflows.

- Managing the chemistry and biology teams to execute discovery programs.

- Overseeing the design and implementation of in-vitro and in-vivo screening paradigms directed to ion channel targets.

- Preparing and presenting to investors and corporate partners.

- Preparing and publishing manuscripts in recognized journals and presenting at meetings.

- Pursuing non-dilutive grant funding as a means for external validation of project and supplemental money.

- Review and approval of scientific data reports.

- Supporting in-licensing, collaboration, and other activities to obtain new company business, to advance key programs, and to identify opportunities for innovative research.

Clinical and Translational Medicine Job Responsibilities

Clinical and Translational Medicine KPIs are designed to track the performance of clinical and translational research processes. These KPIs help to ensure that research processes are efficient, effective, and productive.

- Assist in the writing and review of clinical study reports, manuscripts, and other written projects.

- Collaborate with the clinical working group and external consultants to determine strategy and tactics for clinical development plans, clinical protocols, and safety management plans.

- Contribute to business development, intellectual property, and strategic planning activities.

- Ensure compliance with all safety reporting obligations.

- Monitor and review adverse events, serious adverse events, and real-time laboratory findings from ongoing clinical trials.

- Work with the pre-clinical investigators both within and outside the group to advance clinical development and safety.

Clinical Operations and Data Management Job Responsibilities

Clinical Operations and Data Management KPIs track the performance of clinical research staff in managing and overseeing clinical trials. These KPIs measure the efficiency and effectiveness of activities such as patient enrollment, data collection, and data management, ultimately leading to successful trial completion.

- Assist in the writing and review of clinical study protocols and clinical study reports.

- Collaborate with clinical working group & external consultants to determine strategy & tactics for clinical development plans.

- Contribute to CMC oversight.

- Direct the company clinical operations and data management functions to support the clinical development.

- Formulate clinical operations and data management strategies, tactics, objectives, and performance metrics.

- Prepare for and participate in strategic and financial investor meetings.

- Serve as a member of the Company management team and contribute to the development and execution of Company strategy.

- Support Company business development and intellectual property functions.

Research Investigator, Medicinal Chemistry Job Responsibilities

The Research Investigator, Medicinal Chemistry KPIs are designed to measure the efficiency and success of the individual in the development of novel therapeutic agents. These KPIs aim to track the productivity of the Investigator in the identification and optimization of new drug leads, management of resources, and adherence to project timelines.

- Assisting in the preparation and publishing of manuscripts in recognized journals and presenting at scientific meetings.

- Helping to recruit highly-qualified medicinal chemistry team members.

- Operating in a highly pragmatic and team-oriented manner to enable the research team to deliver clinical development candidates.

- Working collaboratively with the biology team to execute discovery programs with ambitious goals.

- Investigating and, where appropriate, implementing a wide variety of scientific principles and concepts to advance project goals.

- Keeping current with medicinal chemistry and synthetic organic chemistry research.

Senior Research Investigator Job Responsibilities

The Senior Research Investigator is responsible for overseeing and executing complex research projects, managing a team of scientists, and ensuring that the work is completed within the project timeline and budget. KPIs for this role include project completion rate, team performance, and successful delivery of results.

- Assist in preparing and publishing manuscripts in recognized journals and presenting at scientific meetings.

- Electrophysiological recordings from cells in culture including neurons.

- Experimental design, implementation, data collection, analysis, summarization and interpretation.

- Other duties that contribute to the success of the discovery programs.

- Preparation and maintenance of neuronal cultures.

- Use of manual patch clamp system for determining the actions of drugs on ion channel conductances.

- Where necessary, make presentations to company management and to potential and current partner companies and investors.

Senior Research Scientist Job Responsibilities

Senior Research Scientist KPIs are designed to measure the success of the senior research scientist in their role. These KPIs may include productivity metrics such as the number of publications or patents, grant acquisition success rates, team management effectiveness, and successful project outcomes. Additionally, KPIs may also measure the ability of the senior research scientist to innovate, collaborate, and develop new research strategies.

- Actively participate in working group meetings and communicate experimental results to team members.

- Assist with automated patch clamp and manual patch electrophysiology.

- Contribute to SOP development and implementing procedures according to high quality standards.

- Follow all lab safety regulations and maintain an organized and clean work space in the laboratory.

- General laboratory maintenance, including preparation of solutions and stocks.

- Hazardous waste disposal.

- Maintain laboratory notebooks, electronic records and inventory.

- Maintenance of lab equipment.

- Negotiate pricing and quotes with external vendors.

- Perform laboratory inventory checks and restock consumables as needed.

- Running cell based assays (maintenance of cell lines, use of plate reader, liquid handlers and troubleshooting as needed).

Clinical Research KPIs are fundamental to understanding the internal and external dynamics between science and human resources as they both form a vital part of the research study’s successes.

These Key Performance Indicators are useful because they focus on the study’s human resource aspects and attaining the stated benchmarks to prove or disprove the hypotheses. Because these KPIs drive the study’s optimal functioning and success rates, they strengthen and foster relationships between the study’s sponsors, participants, and research scientists.

The study’s leaders are often unaware of the impact of the internal and external dynamics on the study’s outcomes. These KPIs highlight the potential pain points between the human resource components and the study’s scientific constraints to ensure that solutions are found before the issues linked to the pain points spiral out of control and cause incalculable harm to the research study.

Benefits of AssessTEAM cloud-based employee evaluation form for your clinical research team.

- Use on all smart devices

- Include custom KPIs

- Keep historic trends

- Include eSignatures

- 360-degree feedback

- Unlimited customization


Common Metrics to Assess the Efficiency of Clinical Research

Doris McGartland Rubio

Corresponding Author: Doris Rubio, Division of General Internal Medicine, Department of Medicine, School of Medicine, University of Pittsburgh, 200 Meyran Avenue, Suite 200, Pittsburgh, PA 15213, USA; phone 412-692-2023; fax 412-246-6954; [email protected]

Issue date 2013 Dec.

The Clinical and Translational Science Award (CTSA) Program was designed by the National Institutes of Health (NIH) to develop processes and infrastructure for clinical and translational research throughout the United States. The CTSA initiative now funds 61 institutions. In 2012, the National Center for Advancing Translational Sciences, which administers the CTSA Program, charged the Evaluation Key Function Committee of the CTSA Consortium to develop common metrics to assess the efficiency of clinical research processes and outcomes. At this writing, the committee has identified 15 metrics in 6 categories. It has also developed a standardized protocol to define, pilot-test, refine, and implement the metrics. The ultimate goal is to learn critical lessons about how to evaluate the processes and outcomes of clinical research within the CTSAs and beyond. This article describes the work involved in developing and applying common metrics and benchmarks of evaluation.

Keywords: Clinical Research, Common metrics, CTSA, Efficiency of clinical research, Evaluation

Introduction

Researchers continue to struggle with the slow pace at which findings are translated from bench to bedside and, in particular, with the amount of time required to conduct clinical trials and publish results. In cancer research, for example, Dilts and colleagues (2009) found there are almost 300 distinct processes involved in activating a phase III trial and that the median time from conception to activation is over 600 days. While many clinical trials in various fields are never completed because of recruitment and other problems, Ross and colleagues (2012) found that the results of completed trials are published within 30 months in fewer than half of cases and that the overall publication rate is only 68%.

In an attempt to improve processes involved in clinical research, the National Institutes of Health (NIH) created and funded the Clinical and Translational Science Award (CTSA) Program. Administered by the National Center for Advancing Translational Sciences (NCATS), this program was designed to develop infrastructure for clinical and translational research at different institutions throughout the United States. The CTSA initiative now funds 61 institutions and thus represents the largest NIH-funded program to date (CTSA Central, accessed 2013). Although CTSA-funded institutions have responded to the challenge of improving the processes of clinical and translational research in various ways, they all have core entities that help improve the efficiency of research by eliminating barriers and offering specialized services to investigators. Examples of such cores include biostatistics, regulatory compliance, informatics, and clinical research facilities cores.

Recognizing the importance of evaluation, NIH has required that each institution that applies for a CTSA have an evaluation plan that explains in detail how it would evaluate its program and assess its use of funds if it were to receive a CTSA. NIH has required an evaluation plan in every Request for Applications for CTSAs since its inception in 2005. Many institutions employ a variety of evaluation methods, including surveys, bibliometric analyses, and social network analysis to gain a better understanding of teams and multidisciplinary research. Evaluators at each CTSA institution are members of the Evaluation Key Function Committee. This committee has 4 workgroups and 2 interest groups, with focuses on methodology (bibliometrics, qualitative methods, and social network analysis) and learning or defining best practices (research translational mapping and measurement, definitions, and shared resources).

The Evaluation Key Function Committee meets regularly via conference calls and annually at face-to-face meetings to share best practices in evaluation and to collaborate on evaluation projects. The purpose of the committee is not to engage in a national evaluation. In fact, during the first 6 years of the CTSA program, the NIH employed consultants to conduct a national evaluation, focusing on a summative approach and studying progress of the CTSAs, with special emphasis on the accomplishments of scholars trained through the Research Training and Education Key Function (Rubio, Sufian, & Trochim, 2012). The national evaluation was not designed to generate tools or metrics for the individual institutions but, rather, to report on what the institutions with CTSAs had accomplished.

In 2012, the acting director of the Division of Clinical Innovation within NCATS, where the CTSA program is managed, charged the Evaluation Key Function Committee with generating common metrics to assess the efficiency of clinical research in terms of processes and outcomes. These metrics can then be used for benchmarking that would allow each institution to see where its performance falls with regard to efficiency across CTSA-funded institutions. The intent is to give institutions a tool for deciding whether they should undertake process improvement to raise the efficiency of clinical research at their institution. Collectively, the data can be used to document the efficiency of clinical research across all of the CTSA-funded institutions.
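To make the benchmarking idea concrete, the sketch below shows one simple way an institution could see where its value for a time-based metric falls among peers. This is an illustrative assumption, not the committee's method: the function name, the choice of "share of peers that are slower" as the summary, and all numbers are made up for the example.

```python
from statistics import median
from typing import List


def share_of_peers_slower(own_value: float, peer_values: List[float]) -> float:
    """Percent of peer institutions whose value is strictly greater than ours.

    For a time-based metric (days), a greater value means a slower process.
    """
    if not peer_values:
        raise ValueError("peer_values must not be empty")
    slower = sum(1 for value in peer_values if value > own_value)
    return 100.0 * slower / len(peer_values)


# Made-up median turnaround times (days) for five peer institutions
peer_medians = [30, 45, 50, 60, 90]
own_median = 45

benchmark = share_of_peers_slower(own_median, peer_medians)
peer_midpoint = median(peer_medians)
```

An institution sitting well above the peer midpoint on a time-based metric would be a candidate for the kind of process improvement described above.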

Also in 2012, the Institute of Medicine (IOM) was charged with evaluating the CTSA Program. In its report, the IOM argued for common metrics that can be used consistently at all CTSA sites to demonstrate progress of the CTSA Program (IOM 2013). The overarching goal of the CTSA program is to improve health. However, as the report notes, this goal is not feasible or practical to evaluate. One thing that the CTSA Program can do is to develop common metrics that can demonstrate improvements over time with regard to the efficiency of clinical research.

Development of Clinical Research Metrics

In an effort to develop common metrics, the chair and co-chair of the Evaluation Key Function Committee began by asking an evaluation liaison from each of the 61 institutions to meet with the principal investigator of his or her institution’s CTSA to generate a list of 5 to 8 metrics for clinical research processes and outcomes and to bring the list to the annual face-to-face meeting. At the October 2012 face-to-face meeting, the 127 participants met in small groups and shared their metrics. Each group was assigned a facilitator and was asked to rank the metrics in order to identify the top 5–10 metrics. Afterwards, the facilitators from the small groups met to synthesize the top metrics. The process resulted in 15 metrics that they believed to be the most promising and feasible to collect. The following day, a clicker system was used so that the participants could rate each metric on its importance and on the feasibility of collecting data for it. All of the metrics were strongly endorsed by the participants.

The committee presented a list of the 15 metrics, along with the importance and feasibility scores for each metric, to the CTSA Steering Committee (CCSC), which consists of principal investigators from each CTSA institution. The CCSC gave its enthusiastic and unanimous support for the evaluation committee’s effort.

The 15 metrics (Table 1) can be grouped into 6 categories: clinical research processes, careers, services used at the institution, economic return, collaboration, and products. While the rationales for most of these categories are evident, the rationales for the careers and collaboration categories deserve mention. The 2 metrics in the career category (career development and career trajectory) reflect the training of the investigators, and the 2 metrics in the collaboration category (researcher collaboration and institutional collaboration) reflect the willingness to engage in multidisciplinary approaches to conducting clinical research (i.e., investigators from different disciplines collaborating on research) and overcoming barriers to this research. Together, the training and collaboration affect the efficiency of the research endeavors.

Table 1. Fifteen Metrics Developed by the Clinical and Translational Science Award (CTSA) Consortium to Assess the Efficiency of Clinical Research

The Evaluation Key Function Committee formed a smaller workgroup (the Common Metrics Workgroup) to further refine each metric. To help consistently define the metrics, the members of this workgroup examined and modified a template of measure attributes that had been developed earlier by the National Quality Measurement Clearinghouse (NQMC) and Agency for Healthcare Research and Quality (AHRQ, accessed 2012). Our modified template is shown in Table 2.

Table 2. Template of Measurement Attributes to Be Used by the Clinical and Translational Science Award (CTSA) Consortium*

* This template was adapted from a template created by the National Quality Measurement Clearinghouse (NQMC) and Agency for Healthcare Research and Quality (AHRQ), U.S. Department of Health and Human Services. The original template is in the public domain and is available at http://www.qualitymeasures.ahrq.gov/about/template-of-attributes.aspx (accessed December 4, 2012).

In modifying the template, the Common Metrics Workgroup recognized that descriptive data needed to be specified and collected in conjunction with each metric. The descriptive data would provide a context for the metric and enable better interpretation of the data for the metric. For example, expectations regarding the time that lapses between receipt of a grant award and recruitment of the first study subject would vary based on the type of study (phase I, II, or III) and whether the disease being studied was common or rare.

Definition of the Proposed Metrics

At this writing, these metrics have been defined: 1) time from institutional review board (IRB) submission to approval, 2) studies meeting accrual goals, and 3) time from notice of grant award to study opening (Tables 3–8). While these metrics may seem straightforward, defining them proved challenging.

IRB Completion Time (Time from IRB submission to approval)

The time from IRB submission to approval is defined as the number of days between the date that the IRB office received the IRB application for review and the date that the IRB gave final approval with no IRB-related contingencies remaining. One of the challenges in defining the metric was that some institutions require a scientific review of a protocol before the IRB reviews the protocol, while other institutions do not. For the institutions that do require a scientific review, we grappled with whether to define the first date as the date that the proposal was submitted to the IRB or the date that the scientific review was completed. The goal was to provide a definition that could be consistently applied by all institutions. Thus, whenever issues of differences arose, we used descriptive data to define the differences and refine the definitions. This approach resulted in the need to collect more data for the metrics.
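As a rough illustration of the definition above, the metric is a simple date difference that travels together with its descriptive data. The sketch below is only an assumption about how a record might look; the field names and dates are hypothetical and are not taken from the workgroup's template.

```python
from datetime import date


def irb_turnaround_days(received: date, final_approval: date) -> int:
    """Days from IRB receipt of the application to final approval
    with no IRB-related contingencies remaining."""
    if final_approval < received:
        raise ValueError("final approval date precedes receipt date")
    return (final_approval - received).days


# Hypothetical protocol record. The descriptive field records whether a
# separate scientific review was required before IRB review.
record = {
    "received": date(2012, 3, 1),
    "final_approval": date(2012, 4, 15),
    "scientific_review_required": True,
}

turnaround = irb_turnaround_days(record["received"], record["final_approval"])
print(turnaround)
```

Keeping the descriptive flag next to the dates lets institutions with and without a prior scientific review be compared separately rather than pooled.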

We tried to define the second metric, studies meeting accrual goals, but found that it was too broad to define as a single metric. We needed 4 metrics to capture the initial metric: studies with adequate accrual (recruitment/retention), length of time spent in recruitment, study startup time, and problems with subject recruitment.

The third metric, called time from notice of grant award to study opening, was probably the least problematic, but it also presented challenges. We ended up defining study opening as the date that the first subject provided informed consent for participation in the study. Since the prevalence of the disease being studied can greatly affect the length of time until study opening, we included information about disease prevalence in the descriptive data section.
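To show why the descriptive data matters for this metric, the following sketch groups time to study opening by disease prevalence before summarizing it. The study records, field names, and the choice of the median are all illustrative assumptions, not part of the workgroup's definition.

```python
from collections import defaultdict
from datetime import date
from statistics import median


def days_to_opening(noga: date, first_consent: date) -> int:
    """Days from notice of grant award to the first subject's informed consent."""
    return (first_consent - noga).days


def median_by_group(studies, key):
    """Median days to opening, grouped by one descriptive field."""
    groups = defaultdict(list)
    for study in studies:
        groups[study[key]].append(
            days_to_opening(study["noga"], study["first_consent"])
        )
    return {group: median(values) for group, values in groups.items()}


# Hypothetical studies; "prevalence" is the descriptive data item.
studies = [
    {"noga": date(2012, 1, 10), "first_consent": date(2012, 4, 9), "prevalence": "common"},
    {"noga": date(2012, 2, 1), "first_consent": date(2012, 6, 10), "prevalence": "common"},
    {"noga": date(2012, 1, 5), "first_consent": date(2012, 10, 1), "prevalence": "rare"},
]

print(median_by_group(studies, "prevalence"))
```

Reporting the groups separately avoids penalizing an institution whose portfolio happens to contain more rare-disease studies.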

Development of a Standardized Protocol

In addition to defining the first 3 metrics, the Common Metrics Workgroup developed a standardized protocol to do the following: define the remaining metrics, recruit CTSA institutions to pilot-test the metrics, use the results to refine the metrics, implement the refined metrics across the CTSA Consortium, and create benchmarks. The Common Metrics Workgroup recognizes the need to work with the leadership of the CTSA Consortium (e.g., CCSC) to implement the metrics.

A standardized protocol enables the Common Metrics Workgroup to solicit assistance from other groups that may be interested in helping to define the common metrics. Within the Evaluation Key Function Committee, for example, groups include the definitions workgroup, which has already engaged in defining key constructs of the CTSA Consortium, and the bibliometric workgroup, which could be instrumental in defining metrics regarding publications.

To help implement our protocol, we are creating a database that will contain the various lists of metrics that were brought to the 2012 face-to-face meeting of the Evaluation Key Function Committee. While our initial efforts will focus on defining the 15 metrics listed in Table 1 , the database will enable us to prioritize other metrics that need to be defined. As we progress through this process, we will strive to minimize the redundancies across the metrics and to keep the number of metrics to be implemented at a minimum. The intent is for the common metrics work to be useful, not burdensome to institutions.

Because we have a large number of metrics to consider, we anticipate that the metrics will be rolled out in waves, with definitions introduced every 4 months and with pilot-testing instituted during each new wave. For each wave, we will recruit 3–5 CTSA institutions to pilot-test the metrics for 6 weeks. During this time, we will ask each institution to gather data on at least 10 protocols and input the data into REDCap™ (Research Electronic Data Capture), an electronic data capture tool hosted at Vanderbilt University ( Harris et al 2009 ). REDCap is a secure, web-based application designed to support data capture for research studies, providing: 1) an intuitive interface for validated data entry; 2) audit trails for tracking data manipulation and export procedures; 3) automated export procedures for seamless data downloads to common statistical packages; and 4) procedures for importing data from external sources.

At the end of 6 weeks, we will ask the piloting institutions to complete a brief survey about the feasibility of collecting data and about the barriers and obstacles they encountered. Then we will review all of the data to determine if the metric needs to be further refined. Given the diversity in how research is conducted and implemented by the CTSA institutions, we believe that the definitions will have to undergo several iterations. For example, some institutions have an electronic IRB submission and review process, while other institutions still rely on paper applications. These differences will impact the way in which data can be collected.

By collecting the data from all of the institutions in one database, we will be able to use the database for benchmarking. The long-term plan is that for each metric, each CTSA institution will be able to log in to the system, generate a report that displays the de-identified distribution of responses for the metric, and then determine where it lies on the continuum of all institutions. The benchmarking information will remain confidential for individual institutions. Institutions can use the data to determine if they should develop a process improvement plan to increase the efficiency of clinical research at their institution.
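One simple way to express where an institution "lies on the continuum" is a percentile rank against the pooled, de-identified values. The sketch below assumes that approach; the function name and the sample values are hypothetical, and the actual reporting system may summarize the distribution differently.

```python
from typing import Sequence


def percentile_rank(own_value: float, pooled_values: Sequence[float]) -> float:
    """Percentage of pooled institutional values at or below own_value."""
    if not pooled_values:
        raise ValueError("no pooled values to benchmark against")
    at_or_below = sum(1 for value in pooled_values if value <= own_value)
    return 100.0 * at_or_below / len(pooled_values)


# Hypothetical de-identified IRB turnaround times (days), one per institution.
pooled_turnaround_days = [28, 35, 41, 45, 52, 60, 75, 90]

# An institution with a 45-day turnaround compares itself to the pool.
rank = percentile_rank(45, pooled_turnaround_days)
print(rank)
```

For a time-based metric such as this one, a lower percentile rank indicates a faster process than most peers.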

Future Directions

The future of clinical research is dependent on developing significant efficiencies for translating findings from the bench to bedside more quickly and with fewer resources. The metrics that we are working to define and implement can help move us in that direction, but common metrics are not a panacea; they are the first step in assessing several areas for possible improvement.

The overwhelming support for this work across the CTSA funded institutions and the enthusiasm of the CCSC have strengthened our commitment to establishing common metrics for clinical research. Using these common metrics, we will learn critical lessons about how to evaluate and change the processes and the outcomes of clinical research within CTSA funded institutions. We believe that this, in turn, will affect clinical research throughout the academic research community and beyond.

Time from Notice of Grant Award (NOGA) to Date of First Accrual (FA)

For a study measuring accrual achievement in cancer clinical trials, see Cheng et al. “Predicting Accrual Achievement: Monitoring Accrual Milestones of NCI-CTEP Sponsored Clinical Trials”, Clin Cancer Res , 2011 April 1; 12(7): 1247–1955.

Studies with adequate accrual (recruitment)

Length of time spent in recruitment

Study Start-up time

Acknowledgments

Funding: The project reported here was supported by the National Institutes of Health (NIH) through the Clinical and Translational Science Award (CTSA) Program. The NIH CTSA funding was awarded to the University of Pittsburgh (UL1 TR000005).

Declaration of Conflicting Interests: The author declares no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

- Agency for Healthcare Research and Quality (no date) Template of Measure Attributes. [Accessed December 4, 2012]; http://www.qualitymeasures.ahrq.gov/about/template-of-attributes.aspx

- CTSA Central. [Accessed June 26, 2013]; https://ctsacentral.org/institutions

- Dilts DM, Sandler AB, Cheng SK, Crites JS, Ferranti LB, Wu AY, Finnigan S, Friedman S, Mooney M, Abrams J. Steps and time to process clinical trials at the Cancer Therapy Evaluation Program. Journal of Clinical Oncology. 2009;27:1761–1766. doi: 10.1200/JCO.2008.19.9133.

- Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- IOM (Institute of Medicine) The CTSA program at NIH: Opportunities for advancing clinical and translational research. Washington, DC: The National Academies Press; 2013.

- Ross JS, Tse T, Zarin DA, Xu H, Zhou L, Krumholz HM. Publication of NIH funded trials registered in ClinicalTrials.gov: Cross sectional analysis. BMJ. 2012;344:d7292. doi: 10.1136/bmj.d7292.

- Rubio DM, Sufian M, Trochim WM. Strategies for a national evaluation of the Clinical and Translational Science Awards. Clinical and Translational Science. 2012;5:138–139. doi: 10.1111/j.1752-8062.2011.00381.x.


Unlocking Clinical Trial Success: Essential KPIs to Monitor in a Clinical Trial Management System (CTMS)

Medha Datar

- May 13, 2023

This comprehensive guide delves into the critical role of Key Performance Indicators (KPIs) in the context of a Clinical Trial Management System (CTMS). As the biotechnological and pharmaceutical industries increasingly rely on these software systems, understanding the breadth of KPIs that a CTMS can generate is key. From enrollment progress and trial timelines to safety metrics, budget tracking, and patient satisfaction, these systems offer a centralized hub to effectively manage the multifaceted aspects of clinical trials.

Importance and Benefits:

The importance of a CTMS and its KPIs cannot be overstated. With the complexity and high stakes inherent to clinical research, effective management tools are paramount. Here are some key benefits:

- Efficiency and Accuracy: A CTMS, through its numerous KPIs, provides a single source of truth for all trial-related data, leading to more efficient and accurate trial management.

- Informed Decision-Making: KPI reports can help study managers identify potential issues early, allowing them to make data-driven decisions to keep trials on track.

- Regulatory Compliance: KPIs related to regulatory compliance help ensure that trials meet all necessary guidelines and requirements, reducing the risk of regulatory issues that could delay or halt a trial.

- Budget Management: Budget-related KPIs provide real-time insights into the financial status of the trial, supporting better financial management and planning.

- Performance Benchmarking: Site performance metrics and other KPIs can be used to benchmark performance, helping sponsors and CROs to improve trial design and management in future studies.

- Risk Management: Risk management KPIs can help study managers identify and manage potential risks, enhancing the overall integrity and safety of the trial.

- Patient-Centric Approach: KPIs such as patient satisfaction and quality of life metrics ensure that the trial is taking a patient-centric approach, which can lead to better recruitment and retention rates.

A Clinical Trial Management System (CTMS) is a software system used by biotechnological and pharmaceutical industries to manage clinical trials in clinical research. It’s a centralized system to manage all aspects of clinical trials, including planning, preparation, performance, and reporting.

Below are some key performance indicator (KPI) reports that might be generated from a CTMS:

- Enrollment Progress: This report will show the progress of patient recruitment and retention against predefined targets.

- Trial Timeline: This report tracks the trial’s progression against its initial schedule, showing any deviations and helping to identify potential delays.

- Data Entry and Quality Metrics: This report tracks the timeliness and quality of data entry into the system. It may track missing data, errors, and corrections.

- Protocol Deviations/Violations: This KPI report identifies any deviations from the protocol that could potentially impact the study’s integrity or participant safety.

- Site Performance Metrics: This report ranks the performance of the various investigative sites, considering factors like recruitment numbers, data quality, protocol adherence, and communication responsiveness.

- Safety Metrics: This includes adverse events, serious adverse events, and safety endpoint data. These metrics are critical in assessing the safety profile of the investigational product.

- Budget Metrics: This report tracks the financial aspects of the trial, such as the budget versus actual costs, per patient costs, and site payment status.

- Regulatory Compliance: This KPI report can help to ensure that the trial is adhering to all necessary regulatory guidelines and requirements.

- Vendor Management Metrics: If third-party vendors are involved in a study, this report tracks their performance and compliance with the terms of their contracts.

- Study Milestones: This report tracks the key milestones of the study, such as the first patient in, last patient in, first patient out, and last patient out, and compares them to the projected timelines.

- Screen Failure Rates: This report identifies the number of participants who were screened but did not qualify to participate in the trial. High screen failure rates may indicate problems with the inclusion/exclusion criteria or the recruitment process.

- Dropout Rates: This report monitors the number of participants who leave the trial before completion. It’s important to understand why dropouts occur to ensure the integrity of the trial and that patient rights and safety are maintained.

- Data Query Rates: This KPI report tracks the number of queries generated for data clarification. A high query rate could indicate issues with data quality or entry.

- Audit Outcomes: Audits are a crucial part of clinical trials to ensure compliance with Good Clinical Practice (GCP) and other regulations. This report would track audit findings and any subsequent corrective and preventative actions.

- Patient Visit Adherence: This report measures the percentage of completed patient visits compared to those scheduled. Missed visits could impact the trial’s data and outcomes.

- Ethics Committee Approvals: This report tracks the status and outcomes of submissions to ethics committees (or institutional review boards in the U.S.).

- Resource Utilization: This report would provide insight into the human and other resources used for the trial. It could track metrics like staff hours or equipment use.

- Investigational Product Accountability: This report ensures that the investigational product is being appropriately managed and tracked. It might track distribution, usage, and return or disposal of the product.

- Risk Management Metrics: These might include various measures of risk, such as deviations from risk thresholds, the status of risk mitigation plans, or the outcomes of risk assessments.

- Patient Satisfaction: While not always easy to measure, patient satisfaction can be a crucial indicator of a trial’s success. This could involve surveys or other feedback mechanisms.

- Amendment Frequency: The number of times the protocol has been amended. Frequent amendments might signal issues with the initial design of the trial.

- Time to Contract Approval: The time it takes to negotiate and approve contracts can impact the trial’s start date. This KPI can help identify inefficiencies in the process.

- Time to First Data Entry: This measures the time from patient enrollment to first data entry into the CTMS. Delays in data entry can impact data quality and the timeliness of analyses.

- Data Lock Timeline: This is the time it takes to lock the data after the last patient’s last visit. Speedy data lock is critical for analysis and further steps in the clinical trial process.

- Time to Database Ready for Analysis: This is the time from the last patient’s last visit until the database is clean and ready for final analysis.

- Publication Success: This KPI tracks the number of trials that result in successful peer-reviewed publications.

- Quality of Life Metrics: For some trials, it might be important to assess the impact of the intervention on the quality of life of the participants.

- Patient Demographics: Metrics related to the diversity of the patient population, such as age, gender, ethnicity, and socioeconomic status, can be important in ensuring the generalizability of the trial results.

- Data Transfer Success Rate: If data is being transferred between systems, this KPI measures the success rate of those transfers and helps identify any technical issues.

- Staff Training and Certification: This KPI tracks the completion of necessary training and certification for staff members involved in the trial.

Remember, not all these KPIs will be relevant to every trial. The specific needs of the study and the requirements of the regulatory authorities overseeing the trial should guide the selection of KPIs.
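Several of the KPIs listed above reduce to simple ratios over counts that a CTMS would typically hold. The sketch below is a minimal illustration under that assumption; the `SiteCounts` structure and its field names are hypothetical and do not correspond to any particular CTMS product or export format.

```python
from dataclasses import dataclass


@dataclass
class SiteCounts:
    """Hypothetical per-site counts pulled from a CTMS export."""
    screened: int
    enrolled: int
    completed: int
    visits_scheduled: int
    visits_completed: int


def ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def site_kpis(counts: SiteCounts) -> dict:
    """Compute three ratio-style KPIs for a site whose trial has finished."""
    return {
        # Screened participants who did not qualify or enroll.
        "screen_failure_rate": ratio(counts.screened - counts.enrolled, counts.screened),
        # Enrolled participants who left before completion.
        "dropout_rate": ratio(counts.enrolled - counts.completed, counts.enrolled),
        # Completed visits as a share of scheduled visits.
        "visit_adherence": ratio(counts.visits_completed, counts.visits_scheduled),
    }


example = SiteCounts(screened=40, enrolled=30, completed=24,
                     visits_scheduled=200, visits_completed=180)
print(site_kpis(example))
```

The dropout figure here assumes every enrolled participant has either completed or left; for an ongoing trial, participants still in follow-up would need to be excluded from the denominator.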

Conclusion:

In the intricate and high-stakes world of clinical research, having a robust Clinical Trial Management System (CTMS) equipped with a comprehensive suite of Key Performance Indicators (KPIs) is a game-changer. From planning and preparation, through performance, and finally to reporting, these KPIs serve as invaluable tools in ensuring the effectiveness and success of clinical trials.

The value of the KPIs highlighted in this guide is multifaceted. They offer real-time insights into the progress of the trial, shedding light on patient enrollment and retention, data quality, site performance, safety, budgeting, and much more. The data derived from these indicators not only enhances the efficiency and accuracy of trials but also enables proactive decision-making, allowing issues to be identified and addressed early on.

Moreover, these KPIs play a crucial role in regulatory compliance, a paramount aspect in any clinical trial. By tracking key metrics such as protocol deviations, audit outcomes, and ethics committee approvals, CTMS ensures trials align with all necessary guidelines and standards, mitigating any potential regulatory risks.

Further, the financial benefits of a well-utilized CTMS cannot be overstated. KPIs related to budget management provide vital information on the economic aspects of the trial, facilitating better financial planning and control.

Importantly, CTMS’s role in performance benchmarking and risk management contributes significantly to the overall integrity and safety of trials. KPIs that measure site performance and risk levels enable stakeholders to make informed decisions that enhance patient safety and drive overall trial success.

Lastly, in an era where the patient-centric approach is gaining more emphasis, the capability of a CTMS to measure patient satisfaction and quality of life metrics is of paramount importance. These KPIs ensure trials align with the needs and expectations of patients, leading to better recruitment, retention, and overall patient experience.

In conclusion, the importance of a CTMS in today’s clinical research landscape is undeniable. Leveraging its comprehensive array of KPIs not only drives efficiency and compliance but also contributes to better patient outcomes, ultimately paving the way for breakthroughs in healthcare that save and improve lives. By embracing the power of these KPIs, stakeholders can navigate the complexities of clinical trials with greater confidence and precision, pushing the boundaries of what is possible in clinical research.

Cloudbyz Unified Clinical Trial Management (CTMS) is a comprehensive, integrated solution to streamline clinical trial operations. Built on the Salesforce cloud platform, our CTMS provides real-time visibility and analytics across study planning, budgeting, start-up, study management, and close-out. Cloudbyz CTMS can help you achieve greater efficiency, compliance, and quality in your clinical operations with features like automated workflows, centralized data management, and seamless collaboration. Contact us today to learn how Cloudbyz CTMS can help your organization optimize its clinical trial management processes.


KPIs: how to measure success in clinical trials

Key Performance Metrics or Indicators (KPIs) are the bread and butter of every successful business, which is why they are one of the essential components of clinical research. KPIs help us understand the clinical recruitment process better - from the first contact with potential candidates to the final visit. Essential to initial planning and crucial to the operation of phase 1 clinical trials, understanding your KPIs, your strengths and your weaknesses ultimately makes establishing benchmarks and allocating precious resources that bit easier. If that’s not enough, KPIs - being at the heart of your business plan - are the key to strengthening your relationship with clinical trial sponsors, which could give you a competitive advantage.

Today, we take a look at some of the key KPIs research sites should be tracking , and consider how they can improve your current clinical protocol and patient recruitment strategy. Check out our breakdown below.

From "open for enrollment" to "enrollment quota met"

This KPI is all about keeping an eye on the clock, from the start of recruitment until the moment you hit your clinical trial enrollment quota. The shorter this KPI is, the better for your organisation AND the happier your sponsor will be. This seems like a no-brainer, but it is easier than it seems to get lost in your work and lose track of time. As you may be aware, according to a Pharmafile study, around 50% of clinical trials are delayed due to patient recruitment issues. Longer cycles or delays implementing initial patient engagement metrics can indicate that you need to rethink your clinical recruitment procedure.

If your site’s performance for this KPI is good, i.e. your enrollment quota is met on time or - even better - earlier, then you can leverage this in further negotiations with the sponsor. From our own experience, research sites with a shorter time frame from “open to enrollment” until “enrollment quota met” are more likely to get selected first for future trials with clinical trial sponsors.

Cost per enrolled subject

Research sites with a tight budget will agree that the “cost per enrolled subject” should always be a key KPI. Costs are almost always linked with the efficiency of the recruitment method. So, it’s important to remember that a recruitment channel that looks more expensive at first sight can turn out to be more efficient, and therefore more cost-effective in the long run, than a “cheaper” option.

Staying in budget and choosing the most cost-efficient recruitment method is, of course, important in order to stay competitive and profitable. Consider our following example of CRO X and CRO Y carefully.

CRO X employs outreach via a local newspaper ad for $250 per week. Per month, this is $1,000. Typically response rates from newspaper ads are not high, and CRO X gets on average 30 potential subjects per month. This is more than $30 per referral, and these potential subjects are not pre-qualified.

Unfortunately, 70% of the people that responded to CRO X’s ad are not eligible for the trial, which reduces the number of patients to 9 and ups the cost to more than $100 per subject. The trial is now delayed. The sponsor is not satisfied, meaning CRO X will not be the first choice for a next trial. This, in turn, brings CRO X back to square one, back to evaluating their basic KPI.

CRO Y, on the other hand, decides to engage with patient recruitment companies, who charge a standard performance-based fee per referral. This looks, at first, more costly than the newspaper ad, but it pays off. Trial recruitment is a lot faster and more efficient. In fact, the CRO meets the clinical trial enrollment quota before the official deadline. What’s more, through a pre-qualification process of potential subjects, the error rate in recruitment is significantly lower. The sponsor is very satisfied and will re-engage CRO Y without hesitation.

As you can see from this example, cost is strongly tied to trial efficiency and the overall time-frame of recruitment. Consider your initial investments carefully as supplying a solid amount of capital into your clinical study will build the strong foundations to support the remainder of your trial operations.
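The CRO X arithmetic above can be reproduced in a few lines. This is only a sketch of the calculation using the figures from the example; the variable names are ours, the four-week month follows the example's own $250-to-$1,000 conversion, and "eligible" here means respondents who pass eligibility, which is not yet the same as enrolled.

```python
def cost_per_unit(total_cost: float, units: int) -> float:
    """Spend divided by the number of referrals or subjects obtained."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units


# Figures from the CRO X example.
weekly_ad_cost = 250
weeks_per_month = 4            # the example treats a month as four weeks
referrals_per_month = 30
ineligible_percent = 70

monthly_cost = weekly_ad_cost * weeks_per_month
eligible = referrals_per_month * (100 - ineligible_percent) // 100

cost_per_referral = cost_per_unit(monthly_cost, referrals_per_month)
cost_per_eligible = cost_per_unit(monthly_cost, eligible)

print(f"monthly spend: ${monthly_cost}")
print(f"cost per referral: ${cost_per_referral:.2f}")
print(f"eligible respondents: {eligible}")
print(f"cost per eligible subject: ${cost_per_eligible:.2f}")
```

Running the same calculation for a performance-based channel, using its fee per referral and its own eligibility rate, gives a like-for-like comparison of the two approaches.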

From IRB submit to IRB approval

It’s essential that research sites track the days between submitting their trial protocol to the Institutional Review Board (IRB) and when it is approved. Sometimes, IRB approval can be painfully slow and seems to delay the whole endeavour of conducting a trial. When a site experiences these delays, it is normally a sign that their submission lacks information the IRB requires. The issues vary from application to application, so it is essential that your trial recruitment and clinical protocol is carefully planned as part of your initial feasibility analysis.

Talk to your IRB about the delays directly and make sure to establish a person-to-person point of contact in order to resolve any issues quickly. As you know, IRB approval is usually one of the first milestones in the life cycle of a clinical trial. Use your good track records to your advantage and promote your site accordingly.

Trial retention statistics

Retention, broadly speaking, refers to how many patients clinical studies retain over the course of a single trial. This is a problem almost every research site faces. With an average 30% of participants dropping out over the course of a clinical study , it is no wonder that 85% of clinical trials fail to retain enough patients to operate! While some drop-outs are unfortunately uncontrollable, others are highly preventable.

Retention is all about knowing your audience and being open to fulfilling even the smallest of their needs. Go the extra mile, do the research and work out a general profile for your potential patients. Look to national censuses and wider social surveys for general trends, with the following questions in mind:

What are their priorities in employment, family life, leisure etc.?

What forms of communication do they use regularly?

What are the lifestyle choices that inform their opinions?

After answering these questions, you should find that your answers make up the basis for your patient-care strategy. However, given the current climate, it may be wise for research operators to look beyond these answers and invest in more secure and reliable methods of retention - sparing them vital time to plan and implement patient-centric care metrics throughout their study. This is, of course, where patient recruitment companies come in.

Not that we are biased, but if we were to recommend any one company for the job it would have to be Citruslabs. Linked to our #1 health app in 17 countries , Mindmate, our patient recruitment dashboard directly connects researchers to over 3 million registered patients . Now, we would say that other models are available - but this would be a lie. In fact, our easy-to-use dashboard is the first-of-its-kind for the market ; offering researchers a unique insight into patients’ wants and needs via industry-leading technology. The future of patient recruitment starts here.

Still a little unsure? Check out what our customers have to say about us here .

Interested in finding out more? Get in touch with one of our team here , and check out archives for all our top tips and tricks on running a successful clinical trial in today's constantly changing industry.


The power of clinical trial performance metrics

As the saying goes, “If you can measure it, you can improve it.” In this article, we explore how this relates to site selection and monitoring for clinical trial sponsors, as well as to improving performance for sites.

A growing body of research has demonstrated that performance analytics can optimize clinical research efforts across multiple dimensions. Tracking the right performance metrics can help sponsors efficiently identify potential high-performing sites and also quickly address inefficiencies or bottlenecks through effective site monitoring during the trial. For trial sites themselves, clinical trial metrics can be used internally to improve process efficiency. In this article, we discuss the concepts of performance metrics and key performance indicators, and explore the various benefits of performance analytics for trial operations as well as how the practice is changing amidst new technological advancements.

What are clinical trial performance metrics or quality performance measures?

Clinical trial performance metrics are measures of a trial site’s operational performance. 1 , 2

Also known as clinical research site performance metrics, these indicators provide insight into efficiency, issues, and risks at each trial site. Clinical trial performance metrics can be used by both sponsors and trial sites to analyze site operations, evaluate performance, and make improvements when necessary.

What is a clinical KPI?

Clinical KPI refers to key performance indicators: specific operational measures that are deemed to be the most useful or insightful for monitoring and assessing the current operational performance of a trial site.

The term KPI is often used interchangeably with clinical trial performance metrics, although it has been suggested that the two differ slightly: KPIs indicate which process needs to be assessed, while performance analytics can reveal the actual cause of the problem. 3 Let’s take the issue of a low enrollment rate, for example. A traditional KPI may reveal that the enrollment rate is low, but might not offer further insight into the causes. Modern performance analytics might be able to provide sponsors with deeper insights into the reasons behind this low enrollment rate by leveraging more comprehensive data. In this example, performance analytics may reveal that the low enrollment rate seems to be due to strict inclusion criteria.
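The distinction can be made concrete with a toy screening log. In the sketch below, the enrollment rate plays the role of the KPI, while a tally of recorded screen-failure reasons stands in for the deeper analysis; the log structure and reason labels are invented for illustration and are not drawn from any real system.

```python
from collections import Counter

# Hypothetical screening log: (outcome, recorded reason for screen failure).
screening_log = (
    [("enrolled", None)] * 3
    + [("screen_fail", "inclusion criteria not met")] * 5
    + [("screen_fail", "withdrew consent")]
    + [("screen_fail", "scheduling conflict")]
)


def enrollment_rate(log) -> float:
    """KPI view: share of screened candidates who were enrolled."""
    enrolled = sum(1 for outcome, _ in log if outcome == "enrolled")
    return enrolled / len(log)


def failure_reasons(log, top_n: int = 3):
    """Analytics view: most frequent recorded reasons for screen failure."""
    reasons = Counter(reason for outcome, reason in log if outcome == "screen_fail")
    return reasons.most_common(top_n)


print(enrollment_rate(screening_log))
print(failure_reasons(screening_log, top_n=1))
```

The first number only signals that enrollment is low; the tally points toward the inclusion criteria as the likely cause, which is the kind of insight the paragraph above attributes to performance analytics.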

The utility of measuring site performance

There are several potential benefits for both sponsors and trial sites in measuring clinical performance metrics. In addition to facilitating streamlined site monitoring and assessments, they also support the development of actionable plans that can lead to improved trial outcomes [1, 2].

For sponsors, well-selected performance metrics can improve the efficiency of the site selection stage, provide ongoing insights into trial operations while a study is underway, help with the quick identification of any inefficiencies, and support data-driven decision-making to address such inefficiencies, for example by reallocating resources, re-training staff, or re-calibrating on-site equipment/devices.

For sites, clinical trial performance metrics provide vital, easily monitored insights into internal operations and can help managers distribute workloads effectively across teams, streamline processes, and identify areas where improvements need to be made. Moreover, consistent measurement of performance metrics allows sites to give sponsors practical, real-world data and historical records as proof of their capabilities and as backing for their responses to site feasibility questionnaires.

So, what are some of the performance metrics commonly used to assess the efficiency of clinical research?

How to measure clinical performance: Examples of clinical performance measures and models

A variety of metrics can be used to measure a site’s operational performance, but it is important to focus on those that provide valuable, actionable insights. In other words, don’t get carried away measuring and monitoring everything just because it’s possible and relatively simple with modern technology. The monitoring operation should be designed to provide simplified, streamlined insights that are explicitly relevant to the goals of the sponsor and/or site, and that can be acted upon quickly to produce effective improvements. Below, we discuss some key performance metrics that may be worth considering and possible ways to group them to simplify oversight. Again, the final selection should be based on the metrics that are most relevant to the objectives at hand, with special attention given to high-risk areas/operations.

Categories of clinical trial performance metrics

Analyzing hundreds of individual metrics would quickly become overwhelming and could lead to more confusion than actual benefit. If there is a significant number of performance metrics you believe are worth monitoring, grouping them together may be a good idea to give some structure to the assessment and enable broader insights specific to certain areas/types of operations.

Broadly speaking, performance metrics can be grouped:

- into “standard” clinical metrics (applicable to various studies conducted by the same sponsor or site) and study-specific metrics (unique metrics that might only apply to a single trial)

- according to the stage of the trial (startup, site maintenance, and site close-out metrics)

- by the aspect of the trial being measured, such as patient safety or budget adherence [4, 5].

Standard clinical trial performance metrics versus study-specific metrics

Sponsors may find it helpful to establish a set of standardized quality metrics: those that can be measured across all sites to assess and compare site performance, regardless of the study being conducted. Many factors do not change from one trial to the next, so establishing standard quality performance indicators can save time and help solidify workflows.

However, there may be a handful of study-specific metrics which could be useful for capturing important yet unique aspects of an individual study. These can be updated on a per-trial basis to act as key performance indicators of uniquely important aspects, while still monitoring the standardized metrics to support inter-study comparisons and avoid the need to update all performance metrics for every study.

Grouping according to study phase: Startup, maintenance, and close-out metrics

Classifying clinical trial performance metrics according to the stage of the study can help sponsors conceptualize trial operations throughout the trial lifecycle. This approach is useful for pinpointing where the bottlenecks or setbacks behind costly delays occur, and thus for revealing which general aspects require attention to improve overall efficiency and shorten study timelines.

Examples of specific metrics include the following:

- Startup metrics: cycle times for investigator recruitment, draft budget reception to finalization, site activation, IRB submission to approval, and other cycle times; regulatory approval rate. Generally, shorter cycle times for various aspects of study start-up indicate efficient internal processes and a high degree of professionalism amongst site staff [3].

- Maintenance metrics: participant accrual/enrollment rate, screen failure rate, data quality (completeness of informed consent forms, data accuracy, data handling practices and site logs, etc.), compliance (i.e., absence of non-compliance), adherence to protocol, patients’ treatment adherence, drop-out/attrition rate, and timelines such as cycle time to database lock.

- Close-out metrics: last patient last visit (LPLV), time to site closure, follow-up rate, supply disposal, data cleanliness, document archival, submission of close-out report to IRB, etc.

Grouped by operational/functional concept: Patient safety, data quality, cycle times, budget adherence, etc.

Categorizing metrics by the various operational and functional concepts of trial operations can help you group common measures with the same units, such as money (e.g., dollars) and time (e.g., days). This may provide useful, broader oversight into performance as it relates to specific areas of activity. For example, perhaps the timeline was followed perfectly but data quality suffered as a result of rushed activities. In the case of monetary metrics, sponsors can assess how resources were allocated and spent, if site allocations were sufficient, and if there are any areas where costs could be cut for future studies.
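One way to keep the three grouping schemes side by side is to tag each metric once and regroup on demand. The Python sketch below is only an illustration: the `Metric` class, the catalog entries, and the tag values are assumptions, and the study-specific metric is invented purely as a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    scope: str    # "standard" or "study-specific"
    stage: str    # "startup", "maintenance", or "close-out"
    concept: str  # e.g. "cycle time", "data quality" (labels are assumptions)
    unit: str     # e.g. "days", "percent"


# Hypothetical catalog; the last entry is an invented study-specific metric.
CATALOG = [
    Metric("IRB submission to approval", "standard", "startup", "cycle time", "days"),
    Metric("Screen failure rate", "standard", "maintenance", "enrollment", "percent"),
    Metric("Cycle time to database lock", "standard", "maintenance", "cycle time", "days"),
    Metric("Time to site closure", "standard", "close-out", "cycle time", "days"),
    Metric("Imaging upload turnaround", "study-specific", "maintenance", "cycle time", "days"),
]


def group_by(metrics, attribute):
    """Group metric names by one tag, e.g. 'scope', 'stage', 'concept', or 'unit'."""
    groups = {}
    for metric in metrics:
        groups.setdefault(getattr(metric, attribute), []).append(metric.name)
    return groups


for stage, names in group_by(CATALOG, "stage").items():
    print(f"{stage}: {', '.join(names)}")
```

Calling `group_by(CATALOG, "unit")` instead collects everything measured in days into one bucket, which is the kind of same-unit grouping described above.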

Clinical trial performance metrics and KPIs examples

A 2018 study surveyed clinical research professionals to suggest a list of core performance metrics from among more than 115 clinical research site performance metrics that had been used to track and assess study performance [5]. The researchers hoped to provide some clarity about which metrics may be considered globally important across various trials. As mentioned, it is important for sponsors and sites to carefully select performance metrics that are directly relevant to their study, whether in terms of operational efficiency, final outcomes, or otherwise. The following list includes some examples of specific clinical trial performance metrics:

1. Recruitment/enrollment indicators

- Percentage of target enrollment numbers achieved

- Total number of participants enrolled

- Screen failure rate

- Percentage of participants screened who were randomized

- Percentage of eligible participants who consented

2. Retention statistics

- Percentage of participants who completed the trial

- Number or percentage of participants who withdrew consent

- Percentage of enrolled participants lost to follow-up

3. Cycle times

- Time from site activation to first enrollment

- Time from IRB submission to approval

- Time from draft budget received to final approval

- Time taken to respond to site feasibility questionnaire

4. Data quality, compliance, and patient safety indicators

- Percentage of participants with complete data

- Percentage of participants with a query for primary outcome data

- Number of adverse reactions per enrolled participant

- Time to query resolution

- Participants with at least one protocol compliance violation

- Number of late visits

- Number of major audit findings
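As a rough illustration, several of the recruitment and retention indicators above reduce to simple ratios of counts a site already tracks. The Python sketch below uses hypothetical counts and one plausible choice of denominators (retention is measured against randomized participants, and "randomized" is treated as the enrolled population); a given protocol or sponsor may define these differently.

```python
def percentage(part, whole):
    """Return part/whole as a percentage, or None if the denominator is zero."""
    if whole == 0:
        return None
    return 100.0 * part / whole


def site_metrics(counts):
    """Derive a few recruitment and retention indicators from raw counts.

    Assumption: 'randomized' is treated as the enrolled population.
    """
    randomized = counts["randomized"]
    return {
        "pct_target_enrollment": percentage(randomized, counts["target_enrollment"]),
        "screen_failure_rate": percentage(counts["screen_failures"], counts["screened"]),
        "pct_screened_randomized": percentage(randomized, counts["screened"]),
        "pct_completed": percentage(counts["completed"], randomized),
        "pct_lost_to_follow_up": percentage(counts["lost_to_follow_up"], randomized),
    }


# Hypothetical counts for a single site.
counts = {
    "target_enrollment": 40,
    "screened": 80,
    "screen_failures": 48,
    "randomized": 32,
    "completed": 24,
    "lost_to_follow_up": 3,
}

for name, value in site_metrics(counts).items():
    print(name, "n/a" if value is None else f"{value:.1f}%")
```

Returning `None` for a zero denominator keeps a site that has not screened anyone yet from showing up as a misleading 0%.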

The evolution of performance analytics alongside emerging technologies in clinical trials

Clinical trial performance metrics and performance analytics capabilities are evolving as clinical trials increasingly adopt technological tools such as electronic data capture (EDC), clinical trial management systems (CTMS), and clinical data management systems (CDMS).

Many of these solutions have advanced functionalities that allow sponsors and sites to readily visualize various performance metrics to gain deep insights into data quality, patient safety, compliance, and other factors, with minimal manual involvement.

Moreover, the adoption of these tech solutions has digitized many manual processes and improved communication across teams, which in many cases has improved site performance overall, especially in aspects such as quality control and risk management.

The future of performance analytics in clinical trials is promising, with advanced capabilities arising within the landscape of technological advances, predictive modeling, machine learning, and artificial intelligence [6].

Clinical trial performance metrics are insightful indicators that are useful for sponsors in terms of selecting high-performing sites and monitoring their performance, and for sites in terms of improving internal operational efficiency and accuracy. There are essentially innumerable performance metrics that could be monitored, but it’s important that only the most relevant are identified such that the performance monitoring strategy provides clear and actionable insights that support effective decision-making.

Sponsors and sites could consider categorizing clinical performance metrics to provide more structured oversight, as well as adopting digital solutions to enhance and streamline performance analytics capabilities by leveraging increased automation and systems integration. As new clinical trial models and possibilities continue to emerge and develop alongside new technologies, clinical trial performance metrics and analytics will become increasingly powerful; if employed intelligently, these advancements could result in enhanced patient safety while reducing study timelines and time-to-market for new therapies.

Maximize Growth And Profitability With These Powerful Metrics

By: Lisa Rodriguez, Senior Account Manager

Monitoring Key Performance Indicators (KPIs) is essential to ensuring the profitability and growth potential of your clinical research site. A frequent review of established KPIs gives you the opportunity to identify trends, track progress, pivot/re-strategize when goals are not being met, or simply provide peace of mind when things are on track. So what metrics are most important to track? Since there are so many different Site metrics and they can vary by Site and study, here’s a review of four “key” metrics that are relevant for every site.

Study Start-Up

Many Sponsors and CROs keep metrics on a Site’s study start-up performance. Historic start-up metrics could potentially play a part in your Site selection outcome, so it only makes sense for a Site to track this data as well. A few key study start-up metrics that are essential to success include:

- Time elapsed from study award to Site Initiation Visit (SIV)/activation

- Time elapsed from contract/budget received to fully executed contract

- Time elapsed from receipt of regulatory packet to regulatory submission to sponsor/CRO

- Time elapsed from IRB submission to IRB approval

By tracking these and other study start-up metrics, Sites can identify areas of improvement to speed up internal processes, ensuring Site goals are met and they remain competitive for future Site selection opportunities.
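For Sites that log milestone dates, the four start-up metrics above are simple date differences. Here is a minimal Python sketch; the milestone names and the dates are hypothetical, and elapsed time is counted in calendar days rather than business days.

```python
from datetime import date

# Hypothetical milestone dates for one study at one Site.
milestones = {
    "study_award": date(2024, 1, 8),
    "contract_received": date(2024, 1, 15),
    "regulatory_packet_received": date(2024, 1, 17),
    "regulatory_submission": date(2024, 1, 31),
    "irb_submission": date(2024, 2, 1),
    "irb_approval": date(2024, 2, 22),
    "contract_executed": date(2024, 2, 26),
    "site_activation": date(2024, 3, 11),
}

# Each cycle is a (start milestone, end milestone) pair.
CYCLES = {
    "award_to_activation": ("study_award", "site_activation"),
    "contract_received_to_executed": ("contract_received", "contract_executed"),
    "regulatory_packet_to_submission": ("regulatory_packet_received", "regulatory_submission"),
    "irb_submission_to_approval": ("irb_submission", "irb_approval"),
}


def cycle_times(dates, cycles=CYCLES):
    """Return elapsed calendar days per cycle, skipping cycles with a missing milestone."""
    result = {}
    for name, (start, end) in cycles.items():
        if start in dates and end in dates:
            result[name] = (dates[end] - dates[start]).days
    return result


for name, days in cycle_times(milestones).items():
    print(f"{name}: {days} days")
```

Skipping cycles with a missing milestone lets the same function run on studies that are still in start-up.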

Study Recruitment and Enrollment

There is no successful study without successful recruitment and enrollment. A site must keep a weekly, if not daily, pulse on their recruitment efforts and outcomes, to ensure goals are met. A few key study recruitment metrics include:

- Number of contact attempts (phone calls, emails, texts, etc.) to reach potential subjects

- Number of scheduled Screening Visits