Top 10 Data Analyst Interview Questions and Answers [Updated 2024]

Andre Mendes

November 24, 2024

Data Analyst Interview Questions

Can you explain a situation where you used data analysis to solve a difficult problem?

How to Answer: The interviewer wants to understand your problem-solving skills and how you apply data analysis to real challenges. Your answer should cover the problem you faced, the steps you took to address it, the data analysis techniques you used, and the results of your efforts.

Sample Answer: In my previous role at XYZ Company, we were facing a significant decline in product sales, and I was tasked with identifying the cause and suggesting solutions. I analyzed our sales data for the past two years using regression analysis and found that sales were low in the regions where we had invested the least marketing effort. Based on this analysis, I recommended increasing our marketing effort in those regions. After we implemented this, sales rose 20% over the next quarter.


Can you describe a time when you had to present data analysis results to a non-technical audience? How did you ensure they understood your findings?

How to Answer: The best way to answer this question is to demonstrate your ability to simplify complex data and communicate effectively. Start by outlining the situation and why the presentation was necessary. Then, describe the steps you took to make the information more understandable, such as using visual aids, simplifying the language, or providing real-world examples. Finally, explain the outcome of the presentation and any feedback you received.

Sample Answer: In my previous role at XYZ Company, I was tasked with analyzing customer data and presenting the findings to our marketing team, which didn’t have a technical background. I knew that just presenting the raw data wouldn’t be helpful, so I decided to use a more visual approach. I created a few PowerPoint slides with charts and graphs that clearly showed trends and patterns in the data. I also provided context by comparing these trends to specific marketing campaigns. After the presentation, several team members commented on how easy it was to understand the data, and the marketing director used my findings to adjust their strategy for the next quarter.


Can you describe a time when you used a particular data analysis tool or technique that significantly improved the outcome of a project?

How to Answer: In your answer, describe the project and the problem you faced. Explain why you chose that particular tool or technique, how you implemented it, and how it improved the project’s outcome. Be specific about the results and any metrics showing the improvement. Lastly, reflect on what you learned from the experience.

Sample Answer: In my previous role at XYZ Company, we had a project that required us to forecast future sales for a new product. The linear regression model we initially used wasn’t yielding accurate results because of the complexity and variability of our data. I decided to implement a machine learning technique, specifically a Random Forest model, because of its strength in handling complex, non-linear relationships. After cleaning and preparing our data, I trained the model, and it improved our forecast accuracy by 30%. This resulted in better planning and allocation of resources, which ultimately saved the company about $100,000 in the first quarter alone. This experience taught me the value of exploring advanced techniques when traditional methods fall short.


How do you handle missing or inconsistent data in your datasets?

How to Answer: This question tests your problem-solving skills and your ability to work with imperfect data. Explain the steps you typically take to deal with this common issue, and mention any specific tools or techniques you use.

Sample Answer: Whenever I encounter missing or inconsistent data, I first try to understand the nature and extent of the issue. I use visualizations and summary statistics to identify patterns in the missing or inconsistent values. Depending on the situation, I might use imputation methods to fill in missing data or apply data cleaning techniques to correct inconsistencies. In some cases, I need to consult subject matter experts or go back to the data source for clarification. I also make sure that any data manipulation I do is properly documented for transparency and reproducibility. I use tools such as Python’s pandas library and R’s tidyr and mice packages for these tasks.
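The first step in this answer, understanding the nature and extent of the problem, can be sketched with the standard library alone. This minimal illustration uses hypothetical records: it measures the share of missing values per column and shows how inconsistent category labels collapse once whitespace and case are normalized. On a pandas DataFrame, `df.isna().mean()` and the `.str.strip()` accessor play the same roles.

```python
from collections import Counter

# Hypothetical records; None marks a missing value (illustrative data only)
rows = [
    {"region": "North", "revenue": 120.0},
    {"region": "north ", "revenue": None},
    {"region": "South", "revenue": 95.5},
    {"region": None, "revenue": 101.0},
    {"region": "SOUTH", "revenue": 88.0},
]

def missing_share(records, column):
    """Fraction of records where `column` is missing."""
    return sum(r[column] is None for r in records) / len(records)

def normalize_label(value):
    """Trim whitespace and unify case so 'north ' and 'North' match."""
    return value.strip().title() if value is not None else None

# Extent of missingness per column
profile = {col: missing_share(rows, col) for col in ("region", "revenue")}

# Label frequencies after normalization reveal the real categories
label_counts = Counter(normalize_label(r["region"]) for r in rows)

print(profile)
print(label_counts)
```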

How would you determine the key variables that have the most impact on an outcome in a dataset?

How to Answer: Describe how you would use statistical techniques, such as correlation or regression analysis, to identify key variables. Also explain how you would check the validity of your findings, for example by looking for confounding variables or using cross-validation.

Sample Answer: I would start with a correlation analysis to identify the variables most strongly associated with the outcome. Then I would use regression analysis to quantify the effect of each variable on the outcome while controlling for the others. Because correlation does not imply causation, I would also consider the business context and possible confounding variables. For validation, I would split the data into a training set and a test set and check whether the model built on the training set also performs well on the test set.


Tell me about a time when you had to use complex algorithms for data analysis. What was the project and how did you implement them?

How to Answer: When answering this question, first briefly describe the project and its objectives. Then discuss the specific algorithms you used, why you chose them, and how you implemented them in your analysis. Be specific about the process and the results you achieved. You can also mention any challenges you faced along the way and how you overcame them.

Sample Answer: In my previous role at XYZ Corp, I was asked to develop a customer segmentation model for our marketing team. The objective was to identify distinct customer segments based on purchasing behavior. I chose the K-means clustering algorithm because it is a standard choice for segmentation and scales well to large datasets like ours. I implemented it using Python’s scikit-learn library. The results were impressive: we identified five distinct customer segments, which helped the marketing team tailor their strategies more effectively. The main challenge was tuning the algorithm’s parameters, especially the number of clusters, to get well-separated clusters, which I addressed with a grid search over candidate settings.
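To show that you understand what K-means actually does, you can describe its two alternating steps. The from-scratch sketch below (Lloyd's algorithm, standard library only, hypothetical two-feature customer points) is for explanation; scikit-learn's `KMeans` adds smarter initialization (k-means++) and multiple restarts. A grid search over the number of clusters would rerun this for several values of `k` and compare a quality score such as the silhouette coefficient.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: centroids (and assignments) stopped changing
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers as (annual spend in $1k, orders per month)
points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, clusters = kmeans(points, k=2)
print(clusters)
```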

Tell me about a time when you had to analyze large volumes of data. What were the challenges and how did you overcome them?

How to Answer: When answering this question, focus on the specific challenges you faced while analyzing large datasets. Discuss the methods, techniques, or tools you used to overcome them. Show how you planned your approach to managing the data, ensuring its quality, and deriving insights that informed decisions. Also illustrate your problem-solving skills and your ability to work under pressure.

Sample Answer: In my previous role at XYZ Inc., I worked on a project that required analyzing an extensive customer dataset for a market segmentation initiative. The challenges were the sheer volume of the data and the short deadline. To manage the data, I used SQL for querying and data manipulation, and Python libraries such as pandas and NumPy for cleaning and preprocessing. The most significant challenge was ensuring the accuracy of the data, which I addressed by implementing rigorous error-checking procedures and cross-validating the results. Despite the pressure, I delivered the project on time, and the insights from the analysis significantly influenced our marketing strategy.


Describe a situation where you had to clean a large dataset before analysis. How did you go about it?

How to Answer: In your response, highlight your skills in data preprocessing, including techniques for dealing with missing values, outliers, and duplicates. Mention the tools or programming languages you used. Demonstrate your attention to detail, your decision-making, and your understanding of why clean data matters for accurate analysis.

Sample Answer: In my previous role at XYZ Corp, I was given a project that involved analyzing a dataset of over a million records. The data was cluttered with missing values, duplicates, and outliers. I started by handling the missing values: for numerical data I used mean imputation, and for categorical data I used mode imputation. For the outliers, I used the IQR method to detect them and chose to cap them rather than drop them, to avoid losing data. For duplicates, I used pandas’ duplicated() method to identify them and drop_duplicates() to remove them. The process was challenging, but it significantly improved the quality of our analysis and the accuracy of our predictive model.
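The three cleaning steps in this answer can be sketched with Python's standard library on a small hypothetical table. One deliberate choice: this sketch caps outliers with the 1.5 × IQR fences before computing the imputation mean, so a single extreme value does not skew the filled-in values; the sample answer lists imputation first, and either order is a judgment call worth explaining in an interview. In pandas, the equivalents are `drop_duplicates()`, `Series.clip()`, and `fillna()`.

```python
from statistics import mean, mode, quantiles

# Hypothetical records: (customer_id, age, plan); None marks a missing value
records = [
    (1, 34, "basic"),
    (2, None, "pro"),
    (3, 29, None),
    (3, 29, None),       # exact duplicate of the previous row
    (4, 41, "basic"),
    (5, 38, "pro"),
    (6, 45, "basic"),
    (7, 31, None),
    (8, 36, "pro"),
    (9, 250, "basic"),   # implausible age, treated as an outlier
]

# 1. Drop exact duplicate rows, keeping the first occurrence and original order
deduped = list(dict.fromkeys(records))

# 2. IQR fences from the observed (non-missing) ages, then cap instead of dropping
observed = [age for _, age, _ in deduped if age is not None]
q1, _, q3 = quantiles(observed, n=4, method="inclusive")
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr

def cap(age):
    """Clip an age into [low, high]; leave missing values alone."""
    return None if age is None else min(max(age, low), high)

capped = [(cid, cap(age), plan) for cid, age, plan in deduped]

# 3. Impute: mean for the numeric column, mode for the categorical column
age_fill = mean(a for _, a, _ in capped if a is not None)
plan_fill = mode(p for _, _, p in capped if p is not None)
cleaned = [
    (cid, age_fill if age is None else age, plan_fill if plan is None else plan)
    for cid, age, plan in capped
]
print(cleaned)
```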

Tell me about a time when you had to use data to forecast a future trend or outcome. What was your approach and what were the results?

How to Answer: Focus on a specific project or situation where you used data to make a prediction. Explain the tools and techniques you used for forecasting, the process you followed, and the results you achieved. Highlight your understanding of predictive analytics and your ability to use data to inform strategic decisions.

Sample Answer: In my previous job, we wanted to anticipate the future sales of a new product. Using historical sales data for similar products, I built a forecasting model in Python with ARIMA, a time series forecasting technique. I split the data chronologically into a training set and a test set and used the training set to fit the model. After tuning the model parameters, it predicted the test set with high accuracy. The model forecast a 20% increase in sales for the new product in the first quarter, which was close to the actual increase of 22%. This forecast helped the company prepare for the launch and manage inventory efficiently.
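Fitting ARIMA itself is normally done with a library such as statsmodels (`statsmodels.tsa.arima.model.ARIMA`). What the sketch below shows is the evaluation harness around it, on hypothetical monthly sales: a chronological train/test split (time series should never be shuffled) and a mean absolute percentage error (MAPE) score. A simple moving-average forecast stands in for ARIMA here; a tuned ARIMA model should beat this kind of baseline to justify its complexity.

```python
def train_test_split_series(series, test_size):
    """Chronological split: the last `test_size` points become the test set."""
    return series[:-test_size], series[-test_size:]

def moving_average_forecast(history, horizon, window=3):
    """Baseline stand-in for ARIMA: repeat the mean of the last `window` values."""
    level = sum(history[-window:]) / window
    return [level] * horizon

def mape(actual, predicted):
    """Mean absolute percentage error in percent (actual values must be non-zero)."""
    return 100 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical monthly unit sales for a comparable product
sales = [100, 104, 108, 110, 115, 118, 121, 125, 130, 132, 136, 140]
train, test = train_test_split_series(sales, test_size=3)
forecast = moving_average_forecast(train, horizon=len(test))
print(f"MAPE on held-out months: {mape(test, forecast):.1f}%")
```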

Can you describe your process for validating the results of a data analysis?

How to Answer: Your answer should demonstrate your analytical skills and your attention to detail. Describe the steps you take to ensure the accuracy of your data analysis, such as cross-referencing your results with other data, using different methods to arrive at the same conclusion, or testing your model on different datasets. You can also mention any tools or techniques you use to validate your results.

Sample Answer: After completing a data analysis, I validate the results in several ways. First, I cross-check my results against other available data. For instance, if I’m analyzing sales data for a particular product, I might check my results against the overall sales trends for that product category. Second, I often use different methods to see whether they produce the same results. For example, I might use both a regression analysis and a decision tree to predict the same outcome and then compare the results. If they’re significantly different, that’s a sign that I need to look more closely at my data or my methods. Finally, I use tools like Python’s scikit-learn to validate my models: it provides a variety of metrics for assessing model accuracy, and utilities such as cross_val_score make it easy to test a model on different subsets of the data.



© 2024 Mock Interview Pro


Ace Your 2025 Data Analyst Interview: 66 Questions and Answers

1. Introduction to Data Analyst Interviews
2. Technical Skills: SQL and Databases (2.1 Basic SQL Queries; 2.2 Joins and Aggregations)
3. Data Analysis and Visualization (3.1 Data Visualization Tools)
4. Statistical Analysis (4.1 Descriptive Statistics; 4.2 Hypothesis Testing)
5. Programming and Scripting (5.1 Python for Data Analysis; 5.2 R for Data Analysis)
6. Data Wrangling and Cleaning (6.1 Handling Missing Values; 6.2 Dealing with Outliers)
7. Machine Learning Basics (7.1 Supervised Learning; 7.2 Unsupervised Learning)
8. Business Acumen and Domain Knowledge (8.1 Understanding Business Metrics; 8.2 Data-Driven Decision Making)
9. Behavioral and Situational Questions (9.1 Problem-Solving; 9.2 Teamwork and Communication)
10. Case Studies and Practical Examples (10.1 Retail Sales Analysis; 10.2 Customer Segmentation)
FAQ: What are the most important skills for a data analyst in 2025? How can I improve my data visualization skills? What is the best way to prepare for a data analyst interview? How important is domain knowledge in data analysis?

So, you're gearing up for a data analyst interview in 2025? Awesome! The field's evolving fast, and staying ahead of the curve is crucial. This guide's got you covered with 66 data analyst interview questions and answers tailored for 2025. We'll dive into everything from technical skills to behavioral questions, ensuring you're ready to nail that interview.

Data analyst interviews can be intense. They typically involve a mix of technical questions, case studies, and behavioral assessments. Companies want to see if you can handle data, think critically, and communicate effectively. Let's start with the basics.

SQL is the backbone of data analysis. You'll need to be comfortable with queries, joins, and aggregations. Here are some key questions:

- Q: Write a SQL query to select all records from a table named 'employees'.

SELECT * FROM employees;

- Q: How would you select the top 5 highest-paid employees?

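SELECT * FROM employees ORDER BY salary DESC LIMIT 5;

(LIMIT works in MySQL, PostgreSQL, and SQLite; SQL Server uses SELECT TOP 5 * FROM employees ORDER BY salary DESC; instead.)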

- Q: Explain the difference between INNER JOIN, LEFT JOIN, and RIGHT JOIN.

A: An INNER JOIN returns only the rows that have matching values in both tables. A LEFT JOIN returns all rows from the left table plus the matching rows from the right table; where there is no match, the right table's columns are NULL. A RIGHT JOIN is the mirror image: it returns all rows from the right table plus the matching rows from the left table, with the left table's columns NULL where there is no match.
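You can demonstrate the difference between these joins with Python's built-in `sqlite3` module and an in-memory database. The tables and rows below are hypothetical. Older SQLite versions do not support RIGHT JOIN, so the sketch uses the equivalent LEFT JOIN with the tables swapped.

```python
import sqlite3

# In-memory database with two small hypothetical tables
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER, name TEXT, dept_id INTEGER);
    CREATE TABLE departments (id INTEGER, dept_name TEXT);
    INSERT INTO employees VALUES (1, 'Ana', 10), (2, 'Ben', 20), (3, 'Cy', NULL);
    INSERT INTO departments VALUES (10, 'Sales'), (20, 'Finance'), (30, 'Legal');
""")

# INNER JOIN: only employees with a matching department
inner = conn.execute("""
    SELECT e.name, d.dept_name
    FROM employees e INNER JOIN departments d ON e.dept_id = d.id
    ORDER BY e.id
""").fetchall()

# LEFT JOIN: every employee; dept_name is NULL (None) when there is no match
left = conn.execute("""
    SELECT e.name, d.dept_name
    FROM employees e LEFT JOIN departments d ON e.dept_id = d.id
    ORDER BY e.id
""").fetchall()

# RIGHT JOIN equivalent: every department, written as a LEFT JOIN from departments
right_equiv = conn.execute("""
    SELECT e.name, d.dept_name
    FROM departments d LEFT JOIN employees e ON e.dept_id = d.id
    ORDER BY d.id
""").fetchall()

print(inner, left, right_equiv, sep="\n")
```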

- Q: Write a query to find the total sales for each department.

SELECT department, SUM(sales) FROM sales_data GROUP BY department;
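To check the queries above before an interview, you can run them against an in-memory SQLite database from Python. The table contents here are hypothetical; the aggregation adds an alias and an ORDER BY so the output is deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical sales table for the GROUP BY query
conn.execute("CREATE TABLE sales_data (department TEXT, sales REAL)")
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?)",
    [("Toys", 120.0), ("Toys", 80.0), ("Books", 50.0), ("Books", 25.5), ("Garden", 300.0)],
)

# Total sales per department, largest first
totals = conn.execute("""
    SELECT department, SUM(sales) AS total_sales
    FROM sales_data
    GROUP BY department
    ORDER BY total_sales DESC
""").fetchall()

# Hypothetical employees table for the top-5 query
conn.execute("CREATE TABLE employees (name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [(f"emp{i}", 1000.0 * i) for i in range(1, 8)],
)
top5 = conn.execute("SELECT * FROM employees ORDER BY salary DESC LIMIT 5").fetchall()

print(totals)
print(top5)
```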

Data visualization is crucial for communicating insights. Tools like Tableau, Power BI, and Python libraries like Matplotlib and Seaborn are essential. Let's dive into some questions:

- Q: What is the difference between Tableau and Power BI?

A: Tableau is known for its user-friendly interface and strong visualization capabilities. It's great for creating interactive dashboards. Power BI, on the other hand, integrates well with Microsoft products and offers robust data modeling features. It's a bit more technical but very powerful.

- Q: How would you create a bar chart in Matplotlib?

import matplotlib.pyplot as plt

# Data to plot
labels = ['A', 'B', 'C', 'D']
values = [1, 4, 9, 16]

# Draw one bar per category
plt.bar(labels, values)

# Label the axes
plt.xlabel('Categories')
plt.ylabel('Values')

# Display the chart
plt.show()

Statistics is the foundation of data analysis. You need to understand distributions, hypothesis testing, and regression analysis. Here are some key questions:

- Q: What is the difference between mean, median, and mode?

A: The mean is the average value, calculated by summing all values and dividing by the number of values. The median is the middle value when the data is ordered. The mode is the value that appears most frequently.

- Q: How would you calculate the standard deviation?

A: The standard deviation measures how spread out a set of values is around their mean. It is the square root of the variance, which is the average squared deviation from the mean (dividing by n for a population, or by n − 1 for a sample).
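Python's standard `statistics` module covers all of these descriptive measures. The sketch below uses a small hypothetical dataset and also computes the population standard deviation by hand, to show that it is the square root of the average squared deviation. `pstdev` divides by n and `stdev` divides by n − 1.

```python
import math
from statistics import mean, median, mode, pstdev, stdev

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical sample

print(mean(data))    # 5
print(median(data))  # 4.5  (average of the two middle values, 4 and 5)
print(mode(data))    # 4    (most frequent value)

# Population standard deviation by hand: sqrt of the mean squared deviation
m = mean(data)
variance = sum((x - m) ** 2 for x in data) / len(data)
manual_sd = math.sqrt(variance)

print(manual_sd)     # 2.0
print(pstdev(data))  # 2.0, divides by n
print(stdev(data))   # about 2.14, divides by n - 1
```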

- Q: Explain the concept of a p-value.

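A: A p-value is the probability, assuming the null hypothesis is true, of observing a result at least as extreme as the one actually observed. A small p-value (typically ≤ 0.05, the conventional significance level) is taken as evidence against the null hypothesis, so you reject it. It is not the probability that the null hypothesis is true.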
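A permutation test makes the definition of a p-value very concrete: assume the null hypothesis (group labels don't matter), shuffle the labels many times, and count how often the shuffled difference is at least as extreme as the observed one. This standard-library sketch uses hypothetical control and treatment measurements and a two-sided difference in means.

```python
import random

def permutation_test(a, b, n_resamples=10_000, seed=42):
    """Two-sided permutation test for a difference in group means.

    Under the null hypothesis the group labels are exchangeable, so shuffling
    them shows how large a difference arises by chance alone.
    """
    def avg(xs):
        return sum(xs) / len(xs)

    rng = random.Random(seed)
    observed = abs(avg(a) - avg(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(avg(pooled[:len(a)]) - avg(pooled[len(a):]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # The +1 terms count the observed split itself, so p is never exactly 0
    return (extreme + 1) / (n_resamples + 1)

# Hypothetical measurements from two groups
control = [12, 14, 11, 13, 12, 15, 13, 12]
treatment = [15, 17, 16, 18, 14, 17, 16, 19]
p = permutation_test(control, treatment)
print(f"p ≈ {p:.4f}")
```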

- Q: What is the difference between Type I and Type II errors?

A: A Type I error occurs when you reject a true null hypothesis. A Type II error occurs when you fail to reject a false null hypothesis.

Python and R are the go-to languages for data analysis. You need to be comfortable with data manipulation, cleaning, and analysis. Here are some key questions:

- Q: How would you read a CSV file in Python?

import pandas as pd

df = pd.read_csv('file.csv')

- Q: How would you handle missing values in a dataset?

A: You can handle missing values by either removing them or imputing them with mean, median, or mode values. For example:

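# Option 1: drop rows that contain any missing value
df_dropped = df.dropna()

# Option 2: fill missing numeric values with each column's mean
# (numeric_only=True skips text columns, which have no mean)
df_filled = df.fillna(df.mean(numeric_only=True))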

- Q: How would you read a CSV file in R?

df <- read.csv('file.csv')

- Q: How would you create a scatter plot in R?

plot(df$x, df$y, main='Scatter Plot', xlab='X', ylab='Y')

Data is rarely clean. You need to be able to handle missing values, outliers, and inconsistent data. Here are some key questions:

- Q: What strategies would you use to handle missing values?

A: You can handle missing values by:

- Removing rows or columns with missing values.

- Imputing missing values with mean, median, or mode.

- Using algorithms that can handle missing values natively, such as some decision-tree and gradient-boosting implementations.

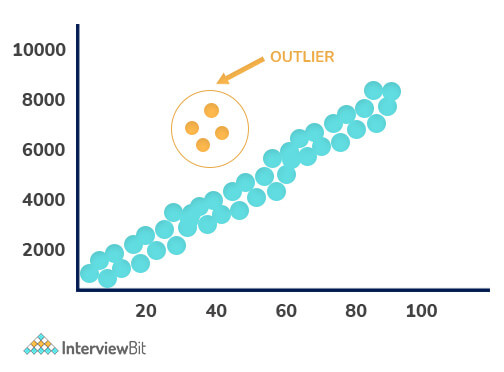

- Q: How would you identify and handle outliers?

A: You can identify outliers using statistical methods like the Z-score or IQR method. Once identified, you can handle outliers by:

- Removing them if they are errors.

- Transforming them if they are valid but extreme values.

- Using robust statistical methods that are less affected by outliers.
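As an example of a robust method, the modified z-score replaces the mean and standard deviation with the median and the median absolute deviation (MAD), so the outlier cannot inflate the yardstick used to measure it. The sketch below follows the commonly cited Iglewicz and Hoaglin version (constant 0.6745, cut-off 3.5) on hypothetical order values.

```python
from statistics import median

def modified_z_scores(values):
    """Robust z-scores: 0.6745 * (x - median) / MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        raise ValueError("MAD is zero; modified z-scores are undefined")
    return [0.6745 * (v - med) / mad for v in values]

def flag_outliers(values, threshold=3.5):
    """Return the values whose modified z-score exceeds the threshold in magnitude."""
    return [v for v, z in zip(values, modified_z_scores(values)) if abs(z) > threshold]

# Hypothetical order values with one suspicious entry
order_values = [52, 48, 50, 51, 49, 47, 53, 500]
print(flag_outliers(order_values))  # [500]
```

With only eight points, the ordinary z-score of 500 here is roughly 2.6, below the usual cut-off of 3, because the outlier inflates both the mean and the standard deviation. The median-based version flags it easily.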

Machine learning is becoming increasingly important in data analysis. You need to understand the basics of supervised and unsupervised learning. Here are some key questions:

- Q: What is the difference between regression and classification?

A: Regression is used for predicting continuous values, while classification is used for predicting categorical labels.

- Q: How would you evaluate a regression model?

A: You can evaluate a regression model using metrics like Mean Absolute Error (MAE), Mean Squared Error (MSE), and R-squared.
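These three metrics are short enough to write by hand, which is a good way to show you know what they measure. The sketch below uses hypothetical actual and predicted values; MAE and MSE are in the target's units (squared units for MSE), while R-squared is the share of variance explained (1 − residual sum of squares / total sum of squares).

```python
def regression_metrics(actual, predicted):
    """Return (MAE, MSE, R-squared) for paired lists of numbers."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_actual = sum(actual) / n
    ss_res = sum(e * e for e in errors)                    # unexplained variation
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)   # total variation
    r2 = 1 - ss_res / ss_tot
    return mae, mse, r2

# Hypothetical actual values and model predictions
mae, mse, r2 = regression_metrics([3, 5, 7, 9], [2.5, 5.0, 7.5, 9.0])
print(mae, mse, r2)
```

Taking the square root of the MSE gives the RMSE, which is in the same units as the target.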

- Q: What is clustering and how would you evaluate a clustering model?

A: Clustering is a type of unsupervised learning used to group similar data points together. You can evaluate a clustering model using metrics like the Silhouette Score or Davies-Bouldin Index.
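scikit-learn provides both metrics (`sklearn.metrics.silhouette_score` and `davies_bouldin_score`). The silhouette score can also be written from its definition, as in this standard-library sketch with hypothetical points and labels. For each point, `a` is the mean distance to the other members of its own cluster, `b` is the lowest mean distance to any other cluster, and `s = (b - a) / max(a, b)`. Scores near 1 indicate well-separated clusters, and negative scores suggest points are assigned to the wrong cluster.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        # Mean distance to the other members (p's distance to itself is 0)
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # Lowest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Hypothetical points: two tight, well-separated groups
pts = [(0, 0), (0, 1), (10, 10), (10, 11)]
print(silhouette_score(pts, [0, 0, 1, 1]))  # close to 1: good clustering
print(silhouette_score(pts, [0, 1, 0, 1]))  # negative: poor clustering
```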

- Q: What is dimensionality reduction and why is it important?

A: Dimensionality reduction is the process of reducing the number of random variables under consideration by obtaining a set of principal variables. It's important for simplifying models, reducing noise, and improving visualization.

Data analysis isn't just about the numbers; it's about understanding the business context. You need to be able to translate data insights into actionable business recommendations. Here are some key questions:

- Q: What is customer lifetime value (CLV) and how would you calculate it?

A: Customer Lifetime Value (CLV) is a prediction of the net profit attributed to the entire future relationship with a customer. It's calculated as the present value of the future cash flows attributed to the customer relationship.
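One common simplified way to turn this definition into a number assumes a constant yearly margin m, a constant retention probability r, a discount rate d, and a fixed horizon of T years: CLV = Σ over t = 1..T of m · r^(t−1) / (1 + d)^t. The sketch below implements that formula under those assumptions; real CLV models often estimate margin and retention per customer segment instead.

```python
def simple_clv(annual_margin, retention_rate, discount_rate, years):
    """Present value of expected margin over a fixed horizon.

    Assumes a constant yearly margin, a constant probability that the customer
    is retained each year, and cash flows received at the end of each year.
    """
    return sum(
        annual_margin * retention_rate ** (t - 1) / (1 + discount_rate) ** t
        for t in range(1, years + 1)
    )

# Hypothetical customer: $100 margin per year, 80% retention, 10% discount rate
print(round(simple_clv(100, 0.8, 0.10, years=3), 2))
```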

- Q: How would you measure customer churn?

A: Customer churn is measured as the percentage of customers who stop using your product or service within a given time frame. It's calculated as the number of churned customers divided by the total number of customers at the start of the period.
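As a worked example of this definition, with hypothetical numbers: customers acquired during the period are excluded from both the numerator and the denominator, which is why the denominator is the count at the start.

```python
def churn_rate(customers_at_start, churned_during_period):
    """Share of the starting customer base lost during the period, in percent."""
    if customers_at_start <= 0:
        raise ValueError("need a positive starting customer count")
    return 100 * churned_during_period / customers_at_start

# Hypothetical quarter: 2,000 customers on day one, 150 of them cancelled
print(churn_rate(2000, 150))  # 7.5
```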

- Q: How would you use data to inform a marketing strategy?

A: You can use data to inform a marketing strategy by analyzing customer segmentation, identifying high-value customers, and optimizing marketing spend based on ROI analysis.

- Q: What is A/B testing and how would you implement it?

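A: A/B testing is a randomized experiment that compares two (or more) versions of a single variable, such as a page layout, by randomly assigning users to variant A or variant B and measuring which performs better on a predefined metric. To implement it, you define the hypothesis and success metric, estimate the required sample size, randomize assignment, run the test for the planned duration, and then use a significance test to decide whether the observed difference is likely to be real.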
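For a conversion-rate A/B test, a common choice of significance test is the pooled two-proportion z-test. This standard-library sketch uses hypothetical counts. It relies on the normal approximation, which assumes reasonably large samples per variant.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)               # rate if A and B were the same
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))            # two-sided
    return z, p_value

# Hypothetical experiment: 5,000 users per variant, 8.0% vs 9.2% conversion
z, p = two_proportion_z_test(conv_a=400, n_a=5000, conv_b=460, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```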

Behavioral questions are designed to understand how you think, solve problems, and work with others. Here are some key questions:

- Q: Describe a time when you had to analyze a complex dataset. How did you approach it?

A: I would start by understanding the business question and the data available. Then, I would clean and preprocess the data, perform exploratory data analysis to identify patterns and outliers, and use appropriate statistical or machine learning methods to derive insights. Finally, I would communicate the findings to stakeholders.

- Q: How do you handle conflicting data or results?

A: I would first try to understand the source of the conflict. It could be due to data quality issues, different methodologies, or sampling errors. I would then validate the data, re-run the analysis, and consult with colleagues to resolve the conflict.

- Q: Describe a time when you had to work with a difficult team member. How did you handle it?

A: I would try to understand their perspective and find common ground. Open communication and setting clear expectations can help resolve conflicts. If the issue persists, involving a manager or HR might be necessary.

- Q: How do you explain complex data insights to non-technical stakeholders?

A: I would use simple language, avoid jargon, and focus on the key takeaways. Visualizations like charts and graphs can help illustrate the points. It's also important to relate the insights to business objectives and potential actions.

Case studies are a great way to demonstrate your analytical skills. Here are some examples:

- Q: You are given a dataset of retail sales. How would you analyze it to identify trends and opportunities?

A: I would start by cleaning the data and handling any missing values. Then, I would perform exploratory data analysis to identify trends, seasonality, and outliers. I would use statistical methods to identify significant variables and build predictive models to forecast future sales. Finally, I would visualize the findings and present them to stakeholders.

- Q: How would you segment customers based on their purchasing behavior?

A: I would use clustering algorithms like K-means or hierarchical clustering to group customers based on their purchasing behavior. I would then analyze the clusters to identify common characteristics and develop targeted marketing strategies for each segment.

Preparing for a data analyst interview in 2025 requires a mix of technical skills, business acumen, and strong communication. By focusing on these 66 data analyst interview questions and answers, you'll be well-equipped to tackle any challenge that comes your way. Remember to stay calm, think through your answers, and show your enthusiasm for data analysis.

Good luck with your interview! If you have any questions or need further clarification, feel free to reach out. I'm always here to help.

- Q: What are the most important skills for a data analyst in 2025?

A: The most important skills for a data analyst in 2025 include proficiency in SQL, statistical analysis, data visualization, programming (Python/R), and business acumen.

- Q: How can I improve my data visualization skills?

A: You can improve your data visualization skills by practicing with tools like Tableau, Power BI, and Python libraries like Matplotlib and Seaborn. Focus on creating clear, intuitive visualizations that effectively communicate insights.

- Q: What is the best way to prepare for a data analyst interview?

A: The best way to prepare for a data analyst interview is to practice common interview questions, work on case studies, and brush up on your technical skills. Also, research the company and the role so you can tailor your answers effectively.

- Q: How important is domain knowledge in data analysis?

A: Domain knowledge is crucial in data analysis because it helps you understand the business context, ask the right questions, and derive actionable insights from the data.


@article{66-data-analyst-interview-questions-answers-for-2025, title = {Ace Your 2025 Data Analyst Interview: 66 Questions and Answers}, author = {Toxigon}, year = {2024}, journal = {Toxigon Blog}, url = {https://toxigon.com/66-data-analyst-interview-questions-answers-for-2025} }


Top 20 Data Analysis Interview Questions & Answers

Master your responses to Data Analysis related interview questions with our example questions and answers. Boost your chances of landing the job by learning how to effectively communicate your Data Analysis capabilities.

Data is the lifeblood of modern business, and as a data analyst, you are the interpreter and gatekeeper of this valuable resource. Your ability to extract meaningful insights from complex datasets can drive strategic decisions and offer competitive advantages to any organization. As such, interviews for data analysis roles are designed not only to test your technical skills but also to gauge your analytical thinking, problem-solving abilities, and communication prowess.

Whether you’re an experienced data maestro or a newcomer with a fresh perspective ready to dive into the data pool, preparing for your interview is key. In this article, we’ll delve into some commonly asked questions in data analysis interviews, providing guidance on how to approach these queries with well-structured responses that demonstrate your expertise and passion for the field.

Common Data Analysis Interview Questions

1. How do you validate the quality of your data sources?

Meticulous scrutiny of data provenance, accuracy, and completeness is crucial for a data analyst to ensure reliable outputs. This question helps gauge an analyst’s competency in establishing the credibility of data, which is foundational to drawing meaningful conclusions and supporting business decisions. It reveals the thought process behind data selection, the ability to identify potential biases or errors, and the strategies implemented to maintain data integrity throughout the analytical lifecycle.

When responding, begin by outlining your approach to assessing data sources, which could include checking for data source reputation, data collection methods, and cross-referencing with other reliable datasets. Discuss the use of statistical methods to spot anomalies or inconsistencies, and mention any specific tools or techniques you employ for data cleaning and validation. Emphasize your proactive stance on continuously monitoring data quality, and share an example where your attention to detail in validating data quality significantly impacted the outcome of a project.

Example: “In validating the quality of data sources, I start by conducting a thorough assessment of the data’s provenance, examining the reputation and credibility of the source, and understanding the methodologies used in data collection. This is complemented by cross-referencing the data with other authoritative datasets to check for consistency and reliability. I employ statistical techniques, such as outlier detection and hypothesis testing, to identify anomalies that may indicate data quality issues.

For data cleaning and validation, I utilize robust tools like SQL for data querying, Python for scripting custom validation rules, and specialized software such as Tableau or R for visual data exploration to spot inconsistencies. An example of the efficacy of this approach was when I identified a subtle but systematic error in a dataset that, once corrected, revealed a trend that was not initially apparent. This discovery led to a strategic decision that resulted in a 15% increase in operational efficiency for the project at hand. My commitment to rigorous data validation ensures that analyses and subsequent decisions are based on the most accurate and reliable information available.”
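"Scripting custom validation rules" can be as simple as a function that checks each record against explicit expectations and reports every violation with its row index. The sketch below assumes a hypothetical orders extract with `order_id`, `amount`, and `country` fields and hypothetical rules (unique non-null IDs, non-negative amounts, an allowed set of country codes); real rules would come from your own schema and business logic.

```python
# Hypothetical allowed values for the hypothetical `country` field
ALLOWED_COUNTRIES = {"US", "CA", "MX"}

def validate_orders(rows):
    """Return a list of (row_index, problem) tuples for rule violations."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Rule 1: order_id must be present and unique
        if row.get("order_id") is None:
            problems.append((i, "missing order_id"))
        elif row["order_id"] in seen_ids:
            problems.append((i, "duplicate order_id"))
        else:
            seen_ids.add(row["order_id"])
        # Rule 2: amount must be present and non-negative
        amount = row.get("amount")
        if amount is None or amount < 0:
            problems.append((i, "amount missing or negative"))
        # Rule 3: country must be one of the expected codes
        if row.get("country") not in ALLOWED_COUNTRIES:
            problems.append((i, "unexpected country code"))
    return problems

rows = [
    {"order_id": 1, "amount": 25.0, "country": "US"},
    {"order_id": 1, "amount": 10.0, "country": "CA"},
    {"order_id": 2, "amount": -5.0, "country": "US"},
    {"order_id": None, "amount": 12.0, "country": "UK"},
]
for problem in validate_orders(rows):
    print(problem)
```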

2. Describe a time when you had to analyze and interpret complex datasets with minimal guidance.

Showcasing an analyst’s proficiency in critical thinking, independence, and problem-solving is essential when faced with minimal guidance. This capability reflects an analyst’s aptitude for autonomy, resourcefulness, and their potential to contribute to the company’s strategic goals without the need for extensive hand-holding or supervision.

When responding, it’s beneficial to recount a specific project where you successfully navigated through a challenging dataset. Detail the steps you took to understand the data, the tools or methods you employed to analyze it, and how you ensured the reliability of your conclusions. Highlight your thought process and the techniques you used to manage and prioritize tasks. Your answer should demonstrate your analytical skills, your ability to work independently, and how your insights provided value to a past employer or project.

Example: “In one instance, I was tasked with analyzing a complex dataset that contained a mix of structured and unstructured data. The dataset had multiple variables with missing values and inconsistencies that needed to be addressed before any meaningful analysis could be conducted. I began by performing an exploratory data analysis using Python, employing libraries such as Pandas and NumPy to clean and preprocess the data. This involved handling missing data through imputation methods and normalizing the data to ensure consistency across different scales.

Once the dataset was prepared, I used a combination of statistical methods and machine learning algorithms to uncover patterns and insights. Specifically, I applied principal component analysis (PCA) to reduce dimensionality and identify the components that explain most of the variance. I then used a Random Forest classifier to model the relationships within the data, as it is less prone to overfitting than a single decision tree and can handle a large number of features effectively. To validate the reliability of my findings, I implemented cross-validation techniques and examined the feature importances to ensure the model’s interpretability.

The insights derived from this analysis were instrumental in guiding strategic decisions, as they highlighted key drivers of the underlying phenomena. My approach not only provided a clear understanding of the complex dataset but also ensured that the conclusions drawn were statistically sound and actionable.”
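The cross-validation step in this answer can be sketched in plain Python. This is a minimal illustration, not the method from the answer: the nearest-centroid classifier is a hypothetical stand-in for the Random Forest, and the toy data is invented. In practice, scikit-learn's `cross_val_score` applied to a `Pipeline` of `PCA` and `RandomForestClassifier` would replace all of this.

```python
import random
from statistics import mean


def k_fold_indices(n, k, seed=0):
    """Shuffle row indices once, then deal them into k nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(X, y, fit, predict, k=5, seed=0):
    """Train on k-1 folds and score accuracy on the held-out fold, k times."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        model = fit([X[j] for j in train_idx], [y[j] for j in train_idx])
        preds = predict(model, [X[j] for j in test_idx])
        correct = sum(p == y[j] for p, j in zip(preds, test_idx))
        scores.append(correct / len(test_idx))
    return scores


# Hypothetical stand-in model: assign each row to the class with the nearest mean vector.
def fit_centroids(X, y):
    return {
        label: [mean(col) for col in zip(*[x for x, t in zip(X, y) if t == label])]
        for label in set(y)
    }


def predict_centroids(centroids, X):
    def sq_dist(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))
    return [min(centroids, key=lambda c: sq_dist(x, centroids[c])) for x in X]


# Toy data: two well-separated groups, repeated to give 30 rows.
X = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.3], [5.0, 5.1], [5.2, 4.9], [4.8, 5.0]] * 5
y = [0, 0, 0, 1, 1, 1] * 5
scores = cross_validate(X, y, fit_centroids, predict_centroids, k=5)
print(scores, mean(scores))
```

Fitting any preprocessing (such as PCA) inside each training fold, rather than once on the full dataset, keeps the held-out fold genuinely unseen; wrapping the steps in a pipeline does this automatically.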

3. What methods do you use for dealing with missing or corrupted data in a dataset?

An analyst’s approach to rectifying missing or corrupted data can significantly impact the outcomes of their analysis. This question targets the candidate’s problem-solving skills, their understanding of data quality, and their knowledge of specific techniques or tools that can be applied to ensure the robustness of their analysis. It also reveals their ability to maintain data integrity without compromising the dataset’s authenticity.

When responding, candidates should outline a systematic approach, starting with identifying the scope and impact of missing or corrupted data. They should discuss the use of software tools or programming techniques for detection and mention industry-standard practices such as imputing values, removing affected records, or using algorithms to reconstruct missing values. Candidates might also address the importance of understanding the nature of the dataset and the context in which it’s used to decide the best course of action. It’s crucial to communicate a balance between technical proficiency and a strategic mindset in preserving the dataset’s usefulness while acknowledging the limitations introduced by such imperfections.

Example: “ In dealing with missing or corrupted data, my initial step is to conduct a thorough exploratory data analysis to assess the extent and pattern of the missingness. If the data is missing completely at random, it may be appropriate to employ listwise or pairwise deletion, depending on the analysis’s requirements and the proportion of missing data. However, when missingness is systematic, for example when it depends on other observed variables (missing at random), I prefer advanced imputation techniques such as multiple imputation or model-based methods like K-nearest neighbors (KNN) or Expectation-Maximization (EM) algorithms, which can preserve the underlying data structure and provide more reliable estimates. If values are missing because of what the missing value itself would have been, no imputation method fully removes the bias, so I also check how sensitive the conclusions are to different assumptions.

For corrupted data, I apply robust data validation rules and anomaly detection algorithms to identify outliers or inconsistencies. Once identified, I decide whether to correct the errors, if possible, or to exclude the corrupted entries, always considering the impact on the dataset’s integrity and the analysis’s validity. In all cases, I ensure that the chosen method aligns with the data’s nature and the research question at hand, documenting the assumptions and potential biases introduced by the handling of missing or corrupted data. This systematic approach ensures the quality and reliability of the subsequent analysis, maintaining the balance between data integrity and the practicality of the dataset’s application.”
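A minimal sketch of the detect, validate, and impute sequence described above, using only the standard library. The records, the 0 to 120 age rule, and the policy of treating invalid values as missing are hypothetical choices for illustration. It uses median imputation as a deliberately simple stand-in; multiple imputation or KNN imputation (for example scikit-learn's `KNNImputer`) would replace `impute_median` when missingness is not completely at random.

```python
from statistics import median

# Hypothetical records; None marks a missing value.
rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 29, "income": None},
    {"age": 41, "income": 58000},
    {"age": -3, "income": 47000},  # corrupted: negative age
]


def missing_share(rows, col):
    """Fraction of rows where the column is missing."""
    return sum(r[col] is None for r in rows) / len(rows)


def flag_invalid(rows, col, lo, hi):
    """Validation rule: indices of present values that fall outside [lo, hi]."""
    return [i for i, r in enumerate(rows) if r[col] is not None and not lo <= r[col] <= hi]


def impute_median(rows, col):
    """Fill missing values with the median of observed values, keeping an indicator column."""
    fill = median(r[col] for r in rows if r[col] is not None)
    out = []
    for r in rows:
        r = dict(r)
        r[col + "_was_missing"] = r[col] is None
        if r[col] is None:
            r[col] = fill
        out.append(r)
    return out


bad = flag_invalid(rows, "age", 0, 120)
# One possible policy: treat values that fail validation as missing before imputing.
cleaned = [dict(r, age=None) if i in bad else r for i, r in enumerate(rows)]
shares = {c: missing_share(cleaned, c) for c in ("age", "income")}
print(bad, shares)
cleaned = impute_median(impute_median(cleaned, "age"), "income")
```

Keeping the `_was_missing` indicator lets a later model, or a reviewer, see which values were filled in, which supports documenting the assumptions introduced by the cleaning step.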

4. In what ways have you leveraged predictive modeling to inform business decisions?

Interpreting data to predict trends, behaviors, and outcomes is a key aspect of data analysis that informs strategic business decisions. This question allows candidates to demonstrate their ability to use historical data to forecast future events and trends, a valuable skill that can give businesses a competitive edge.

When responding to this question, be specific about the models you’ve used, such as regression analysis, time series analysis, or machine learning algorithms. Discuss a particular project where your predictive analysis led to a business decision. Quantify the results if possible, such as by mentioning how your model improved sales forecasts by a certain percentage or reduced costs through better inventory management. This will showcase not only your technical skills but also your understanding of how those skills can directly benefit the business.

Example: “ In leveraging predictive modeling, I’ve utilized a combination of regression analysis and machine learning algorithms to optimize inventory management for a retail chain. By analyzing historical sales data, seasonal trends, and promotional impacts, I developed a model that forecasted product demand with increased accuracy. This model was integrated into the supply chain management system, reducing stockouts by 15% and overstock by 25%, which in turn decreased inventory holding costs and improved cash flow.

On another occasion, I employed time series analysis to refine sales forecasts for a new product launch. By incorporating external variables such as market trends and competitor actions, the model provided insights that informed adjustments to marketing spend and distribution strategies. This resulted in a 20% higher sales volume than initially projected in the first quarter post-launch, demonstrating the model’s efficacy in informing and guiding strategic business decisions.”
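The regression part of this answer can be illustrated with an ordinary least-squares trend line and a holdout check. This is a simplified sketch on invented weekly sales: it models only a linear trend, whereas the answer also describes seasonal and promotional effects, which would enter as additional regressors (for example with scikit-learn's `LinearRegression`).

```python
def fit_line(x, y):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def mean_abs_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical weekly unit sales.
sales = [120, 125, 131, 134, 140, 146, 149, 155, 161, 164, 170, 176]
weeks = list(range(len(sales)))

# Hold out the last 4 weeks to measure forecast accuracy on data the model never saw.
train_w, test_w = weeks[:-4], weeks[-4:]
a, b = fit_line(train_w, sales[:-4])
forecast = [a + b * w for w in test_w]
print(round(b, 2), round(mean_abs_error(sales[-4:], forecast), 2))
```

Scoring the model on weeks it was not fitted on, rather than on its training data, is what makes a claimed accuracy improvement credible.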

5. Detail an experience where you significantly improved data collection processes.

Proactive identification of bottlenecks or inaccuracies in data collection can streamline analysis, leading to more reliable insights and better-informed decisions. This question identifies candidates who can improve both the integrity of the data and the speed at which it is acquired, which directly affects a company’s ability to respond to market trends and internal challenges.

When responding, highlight a specific instance where you noticed a gap or inefficiency in the data collection process. Describe the steps you took to analyze the issue, the solution you implemented, and the positive outcomes that resulted, such as time saved, increased accuracy, or enhanced data usability. Use metrics to quantify the improvement if possible. This will show your problem-solving skills, your initiative, and your impact on the organization’s data-driven decision-making process.

Example: “ In a recent project, I identified a bottleneck in the data collection process where manual entry was leading to both delays and inaccuracies. By conducting a thorough analysis of the existing workflow, I pinpointed the root cause: the reliance on disparate systems that didn’t communicate effectively. To address this, I spearheaded the integration of an automated data ingestion tool that interfaced seamlessly with our existing databases and external data sources.

This automation reduced manual data entry by 75%, significantly diminishing the error rate and freeing up analyst time for more complex tasks. Moreover, it enabled real-time data collection, enhancing the timeliness of the insights we could derive. As a result, the organization saw a 20% increase in operational efficiency and a marked improvement in the quality of data-driven decisions.”

6. Which data visualization tools are you most proficient in, and why do you prefer them?

Translating analytical findings into clear visual representations is a skill that is highly sought after. The tools a candidate is proficient in can reveal their technical acumen, familiarity with current industry standards, and ability to adapt to the specific needs of a project or organization.

When responding to this question, it’s crucial to be specific about your experience with each tool mentioned. Offer a balanced view by discussing how your preferred tools have enabled you to deliver compelling data-driven stories, as well as where they fall short. Articulate the reasons behind your preferences, such as ease of use, advanced features, integration capabilities, or the ability to handle large datasets. If possible, describe a scenario where you successfully utilized these tools to solve a problem or provide insights that benefited your team or project.

Example: “ I am most proficient in Tableau, Python’s data visualization libraries like Matplotlib and Seaborn, and R’s ggplot2. My preference for Tableau stems from its intuitive interface and robust data handling capabilities, which allow for quick iteration and exploration of large datasets. The drag-and-drop functionality and the ability to create interactive dashboards make it an excellent tool for storytelling with data, enabling stakeholders to engage with the visualizations directly.

Python’s Matplotlib and Seaborn are my go-to libraries when I need to create custom visualizations or when I’m working within a Python-heavy data analysis pipeline. The flexibility to tailor plots and the integration with Pandas for data manipulation streamline the workflow significantly. For statistical graphics, I lean on ggplot2 within R because its layered grammar-of-graphics approach works naturally with tidy data and makes complex, multi-faceted plots straightforward to build.

In one instance, using Tableau, I was able to develop a dashboard that synthesized various data sources into a coherent narrative, revealing key performance trends and outliers. This visualization not only facilitated a deeper understanding of the underlying data but also drove strategic decisions that improved operational efficiency.”

7. Outline your approach to conducting A/B testing on a new feature’s performance.

A/B testing is a fundamental tool for a data analyst to measure the performance impact of new features. This question discerns whether candidates understand the scientific method as it applies to the business context and if they can interpret the data to make informed recommendations.

When responding, outline a structured process starting with a clear hypothesis. Then describe how you would randomly assign users to control and treatment groups so that both groups are representative and external factors affect them equally. Explain the importance of selecting the right metrics to measure success, how you would set up the test with minimal disruption to users, and how you would decide in advance how long to run it, based on the sample size needed to detect a meaningful effect. Finally, illustrate how you would analyze the results, draw conclusions, and communicate findings and recommendations to stakeholders, demonstrating an understanding that A/B testing is not just a task, but a strategic tool for improvement.

Example: “ In conducting A/B testing for a new feature’s performance, I would begin by formulating a clear hypothesis based on expected outcomes and how the feature is theorized to impact user behavior. This hypothesis would be specific, measurable, attainable, relevant, and time-bound (SMART).

Next, I would randomly assign users to the control and treatment groups so that both are representative of the overall population. Where variables such as user demographics, behavior, or device usage strongly influence the outcome, I would use stratified randomization or check that these variables are balanced across the groups, to minimize bias and control for external factors. The selection of appropriate metrics is critical; these would be tied directly to the hypothesis and could include conversion rates, engagement metrics, or revenue impact, depending on the feature’s intended effect.

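I would then set up the test to ensure minimal user disruption, using feature flags or a similar mechanism to seamlessly allocate users to their respective groups. Before launch, I would calculate the required sample size from the smallest effect worth detecting, the significance level, and the desired statistical power, taking into account the expected variability in the metrics and the average traffic volume. The test would run until it reaches that sample size, ideally covering at least one full weekly cycle, rather than being stopped as soon as the results look significant, since repeatedly checking and stopping early inflates the false-positive rate.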

Upon concluding the test, I would analyze the results using appropriate statistical methods, such as t-tests for continuous metrics like revenue per user or chi-squared tests for conversion rates, to validate the significance of the observed differences. I would synthesize these findings into actionable insights, clearly communicating the implications to stakeholders and recommending whether to roll out the feature, iterate on it, or discard it based on the evidence gathered. This approach underscores A/B testing as a strategic tool for data-driven decision-making and continuous improvement.”
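The sizing and analysis steps above can be sketched with the standard library. The baseline rate, target lift, traffic figure, and conversion counts below are hypothetical. `sample_size_per_group` uses the common normal-approximation formula for comparing two proportions, and `two_proportion_z_test` gives the same result as a chi-squared test on the 2x2 table without continuity correction (the chi-squared statistic equals z squared).

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control, p_treatment, alpha=0.05, power=0.8):
    """Users needed per group to detect p_control -> p_treatment with a two-sided test
    (normal approximation with unpooled variances)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_beta = std_normal.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_control - p_treatment) ** 2)


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates, using the pooled standard error."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Planning (hypothetical): 10% baseline conversion; smallest lift worth detecting is to 12%.
n = sample_size_per_group(0.10, 0.12)
daily_users_per_group = 500
days = math.ceil(n / daily_users_per_group)
print(n, "users per group, about", days, "days")

# Analysis once the planned sample is reached (hypothetical counts).
z, p = two_proportion_z_test(conv_a=400, n_a=4000, conv_b=480, n_b=4000)
print(round(z, 2), round(p, 4))
```

Fixing `n` before launch and analyzing only once it is reached is what protects the significance level; rerunning the z-test every day and stopping at the first small p-value would not.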

8. Share an example of how you’ve used statistical analysis to solve a real-world problem.

Translating technical skills into tangible outcomes that positively impact the business is a critical aspect of data analysis. This question tests the candidate’s proficiency in applying statistical methods to real-world scenarios, demonstrating their capacity to add value and drive insights that enable data-driven decision-making.

When responding, it’s essential to choose an example that showcases a clear problem, the statistical methods used, and the resulting action or decision that was informed by the analysis. Walk through the steps taken to gather and clean the data, the selection of appropriate statistical tools or models, the analysis process, and how the findings were communicated to stakeholders. It’s important to emphasize the impact of the analysis, such as cost savings, revenue generation, process improvements, or other business benefits. Be prepared to discuss any challenges faced during the analysis and how they were overcome, as this can further illustrate problem-solving skills and adaptability.

Example: “ In a recent project, I was tasked with optimizing the inventory management of a retail chain to reduce excess stock and minimize stockouts. The problem was twofold: overstocking was tying up capital and increasing storage costs, while stockouts were leading to missed sales opportunities and customer dissatisfaction.

To address this, I first consolidated historical sales data, inventory levels, and supplier lead times, ensuring the data was clean and structured for analysis. I then applied time series forecasting methods, specifically seasonal ARIMA (SARIMA) models, to predict future sales patterns. This analysis revealed seasonal trends and product-specific demand cycles. By integrating these insights with an inventory optimization algorithm, I was able to recommend a dynamic reordering strategy that adjusted stock levels in real time based on the forecasted demand.

The implementation of this data-driven approach resulted in a 15% reduction in inventory costs and a 20% decrease in stockouts within the first quarter post-implementation. The success of the project was communicated to stakeholders through a detailed report that highlighted the statistical methods used, the rationale behind the model selection, and the quantifiable business impacts. This not only demonstrated the value of the analysis but also helped in securing buy-in for adopting similar strategies across other product lines.”
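The answer does not specify its inventory optimization algorithm, so as a substitute, here is the textbook reorder-point rule with safety stock, one common way to turn a demand forecast into a reordering decision. Assumptions: the forecast and its error standard deviation come from a model such as a seasonal ARIMA (statsmodels provides a `SARIMAX` class), daily forecast errors are independent and roughly normal, and the SKU figures are hypothetical.

```python
import math
from statistics import NormalDist


def reorder_point(daily_forecast, daily_sd, lead_time_days, service_level=0.95):
    """Expected demand over the supplier lead time plus safety stock.
    Assumes independent, roughly normal daily forecast errors with a constant SD."""
    z = NormalDist().inv_cdf(service_level)
    expected = daily_forecast * lead_time_days
    safety_stock = z * daily_sd * math.sqrt(lead_time_days)
    return math.ceil(expected + safety_stock)


# Hypothetical SKU: forecast of 40 units/day, forecast-error SD of 8, 5-day lead time.
rop = reorder_point(40, 8, 5)
on_hand = 190
print(rop, "reorder" if on_hand <= rop else "hold")
```

Recomputing `reorder_point` each time the forecast is refreshed is what makes the strategy dynamic: the threshold rises ahead of forecast peaks and falls during slow periods.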

9. When is it appropriate to use qualitative data over quantitative data in analysis?

Qualitative data is rich in detail and context, providing insights where numerical analysis falls short. It is particularly useful in understanding the ‘why’ and ‘how’ behind patterns observed in quantitative data, in user experience research, and when dealing with complex issues that require a more narrative form of analysis.

When crafting your response, consider highlighting your experience with using qualitative data to complement quantitative findings, or to provide insights where numbers alone were insufficient. Discuss specific scenarios or projects where qualitative analysis was the best approach, such as developing user personas, performing content analysis, or conducting interviews and focus groups. Demonstrate your ability to discern when a narrative or thematic understanding of the data is necessary to inform decision-making or to grasp the full implications of a problem or solution.

Example: “ In data analysis, qualitative data is particularly valuable when the context and depth of understanding are crucial to interpreting the results. For instance, while quantitative data might tell us that customer satisfaction scores have dropped, qualitative data from customer interviews or free-form survey responses can provide the ‘why’ behind these numbers, revealing the underlying causes and nuances of customer discontent. This deeper insight is essential for developing targeted strategies for improvement.

I’ve leveraged qualitative data effectively during content analysis projects where the goal was to understand the sentiment and themes within customer feedback. By coding and interpreting textual data, I was able to identify patterns and sentiments that quantitative data alone could not have uncovered. This approach was instrumental in refining marketing messages and aligning product features with customer needs. Qualitative data shines in its ability to capture the richness of human experience, which is often lost in purely numerical analysis. It is the key to unlocking the stories behind the data, which in turn can lead to more empathetic and effective decision-making.”
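Qualitative coding is normally done by people, but a keyword codebook can give a first-pass tally that helps organize free-text feedback for review. This sketch assumes a hypothetical three-theme codebook; it cannot handle negation or sarcasm ("not expensive" would still be coded as pricing), so its counts should guide, not replace, a human reading of the responses.

```python
import re
from collections import Counter

# Hypothetical codebook: theme -> keywords agreed on after reading a sample of responses.
CODEBOOK = {
    "pricing": {"price", "expensive", "cost", "cheap"},
    "delivery": {"late", "shipping", "delivery", "arrived"},
    "support": {"support", "agent", "help", "rude"},
}


def code_response(text):
    """Return the set of themes whose keywords appear in a free-text response."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {theme for theme, keywords in CODEBOOK.items() if words & keywords}


feedback = [
    "Delivery was late again and shipping cost too much.",
    "The support agent was rude.",
    "Great product, fair price.",
]
theme_counts = Counter(theme for text in feedback for theme in code_response(text))
print(theme_counts.most_common())
```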

10. What challenges have you faced while integrating data from multiple disparate systems?

Integrating information from various sources is pivotal for providing comprehensive insights and supporting data-driven decision-making. This question assesses the candidate’s experience with the complexity of data ecosystems and their problem-solving skills, as well as their familiarity with tools and methodologies for data reconciliation and transformation.

When responding, focus on specific examples of past projects where you faced such integration challenges. Outline the strategies you employed to address data inconsistencies, your approach to ensuring data quality, and any collaboration with cross-functional teams. Mention the tools and technologies you used, like ETL processes, data warehousing solutions, or specific software like SQL or Python libraries, and highlight the successful outcomes and learnings from the experience.

Example: “ Integrating data from multiple disparate systems often presents challenges in terms of data inconsistency, varying data formats, and differing schemas. In one project, I encountered significant discrepancies in customer data collected from an e-commerce platform and a physical retail system. The key to resolving these issues was a meticulous ETL process, where I used SQL for data extraction and transformation. During transformation, I implemented data cleaning techniques, such as deduplication and normalization, to ensure data quality.

To address schema mismatches, I collaborated with the data engineering team to design a robust data warehousing solution that could accommodate data from both sources cohesively. We utilized Python’s Pandas library for exploratory data analysis to identify and reconcile differences in data representation. The successful outcome was a unified customer view that enabled more accurate sales analytics and improved customer segmentation. This experience underscored the importance of a well-thought-out data integration strategy and the necessity of cross-functional teamwork in overcoming integration challenges.”
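The normalization and consolidation steps in this answer can be sketched as follows. The two extracts, their column names, and the choice of email as the matching key are hypothetical; exact matching on a normalized key is the simplest form of record linkage, and real projects often need fuzzy matching on names or addresses as well. With pandas, the same result would typically come from normalizing the key columns and calling `merge` with `how="outer"`.

```python
import re

# Hypothetical extracts from the two systems, with different schemas and formats.
online = [
    {"email": "Ana.Silva@Example.com ", "name": "Ana Silva", "online_spend": 120.0},
    {"email": "ana.silva@example.com", "name": "Ana Silva", "online_spend": 35.5},
    {"email": "li.wei@example.com", "name": "Li Wei", "online_spend": 80.0},
]
store = [
    {"EMAIL_ADDR": "ANA.SILVA@EXAMPLE.COM", "PHONE": "(555) 010-2000", "store_spend": 60.0},
    {"EMAIL_ADDR": "tom@example.com", "PHONE": "555-010-3000", "store_spend": 15.0},
]


def norm_email(raw):
    """Normalize the join key: trim whitespace and lowercase."""
    return raw.strip().lower()


def norm_phone(raw):
    """Keep digits only, so '(555) 010-2000' and '555-010-2000' compare equal."""
    return re.sub(r"\D", "", raw)


def blank():
    return {"online_spend": 0.0, "store_spend": 0.0}


customers = {}
for r in online:
    c = customers.setdefault(norm_email(r["email"]), blank())
    c["name"] = r["name"]
    c["online_spend"] += r["online_spend"]  # rows for the same customer collapse into one entry
for r in store:
    c = customers.setdefault(norm_email(r["EMAIL_ADDR"]), blank())
    c["phone"] = norm_phone(r["PHONE"])
    c["store_spend"] += r["store_spend"]

for email, c in sorted(customers.items()):
    print(email, c)
```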

11. How do you ensure confidentiality and ethical use of sensitive data?

Handling sensitive information with integrity and discretion is paramount in data analysis. This question determines if a candidate has a robust understanding of data privacy principles and if they can be trusted to handle data with the necessary care.

When responding to this question, candidates should articulate their familiarity with relevant data protection laws, such as GDPR or HIPAA, and any industry-specific regulations. They should discuss the practical steps they take to secure data, such as using encrypted storage, implementing access controls, and adhering to company policies on data sharing. Candidates might also mention their experience with anonymizing data for analysis and their approach to ethical decision-making when faced with potential conflicts of interest. Highlighting any certifications in data privacy or past experiences dealing with sensitive information can further demonstrate their competence in this area.

Example: “ Ensuring confidentiality and ethical use of sensitive data begins with a comprehensive understanding of data protection laws like GDPR and HIPAA, which provide a framework for handling personal information. In practice, I adhere to the principle of least privilege, ensuring that access to sensitive data is restricted to only those who require it for their specific role. This is complemented by employing robust encryption for data at rest and in transit, which safeguards against unauthorized access.

Furthermore, I routinely employ techniques such as data anonymization and pseudonymization to minimize the risk of identification from datasets used in analysis. This not only aligns with legal requirements but also with ethical standards, as it helps maintain individual privacy. In situations where data usage may present ethical dilemmas, I engage in a thorough review process, considering the potential impacts and seeking guidance from ethical frameworks and oversight committees. My commitment to ethical data handling is also evidenced by my proactive approach to staying current with evolving data privacy certifications and regulations, ensuring that my practices reflect the latest standards in data stewardship.”
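Pseudonymization, as described above, can be sketched with a keyed hash from Python's standard library. The environment variable name `PSEUDONYM_KEY` and the fallback key are hypothetical, and the fallback exists only so the demo runs. A keyed hash (HMAC) is used rather than a plain hash because common identifiers such as email addresses can be guessed and hashed by anyone, whereas these tokens can only be recomputed by someone holding the key. Under GDPR, pseudonymized data still counts as personal data, so access controls remain necessary.

```python
import hashlib
import hmac
import os

# The key should live apart from the data (e.g. in a secrets manager). The variable name
# and fallback below are hypothetical; the fallback is for this demo only.
SECRET_KEY = os.environ.get("PSEUDONYM_KEY", "demo-only-key").encode()


def pseudonymize(value: str) -> str:
    """HMAC-SHA256 token: the same input always yields the same token, so joins still
    work, but without the key nobody can recompute tokens for guessed identifiers."""
    normalized = value.strip().lower()
    return hmac.new(SECRET_KEY, normalized.encode(), hashlib.sha256).hexdigest()[:16]


records = [
    {"email": "ana.silva@example.com", "purchase": 120.0},
    {"email": "ANA.SILVA@example.com", "purchase": 35.5},
]
safe = [{"customer_id": pseudonymize(r["email"]), "purchase": r["purchase"]} for r in records]
print(safe)
```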

12. Describe a scenario where you utilized machine learning algorithms in your data analysis.

Harnessing the power of machine learning algorithms to identify patterns and make predictions is a key evolution in data analysis. This question seeks evidence of your ability to apply these advanced techniques to real-world data sets and understand the complexities of algorithm-driven analysis.

When responding, you should outline a specific project or task where you implemented machine learning. Detail the type of algorithm used—such as decision trees, neural networks, or clustering techniques—the data you were analyzing, and the goal of the project. Explain the steps you took to prepare the data, choose the appropriate algorithm, and evaluate the model’s performance. Highlight the outcome of your analysis, what insights were gleaned, and how those insights translated into actionable decisions for the organization. It’s crucial to articulate the thought process behind selecting the machine learning approach and the impact it had, demonstrating both your technical expertise and your ability to drive results.

Example: “ In a recent project, the goal was to improve customer segmentation to tailor marketing strategies more effectively. The data comprised various customer interactions, purchase histories, and demographic information. After preprocessing the data, which included handling missing values, normalization, and feature engineering to highlight behavioral patterns, a K-means clustering algorithm was employed to segment the customer base.

The selection of K-means was driven by the need for an unsupervised method that could scale to a large volume of customer records and identify natural groupings based on purchasing behavior. To choose the number of clusters, the Elbow method was applied, which showed a clear bend in the within-cluster sum of squares that suggested a reasonable cluster count. The clusters were then evaluated with the silhouette score to check that they were both cohesive and well-separated.

The analysis revealed distinct customer segments with unique characteristics, which allowed for more targeted marketing campaigns. The insights led to a 15% increase in campaign response rates and a 10% increase in overall customer satisfaction, demonstrating the effectiveness of the machine learning application in driving tangible business outcomes.”
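The K-means workflow in this answer can be sketched in plain Python to show what the elbow method measures. The two-feature points are synthetic, and the deterministic "farthest point" seeding is a simplification chosen so the example is reproducible; scikit-learn's `KMeans` uses k-means++ seeding by default, and `sklearn.metrics.silhouette_score` computes the silhouette check mentioned above.

```python
import random


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def init_farthest(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add the
    point farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm. Returns (centroids, assignment, inertia)."""
    centroids = init_farthest(points, k)
    assignment = None
    for _ in range(max_iter):
        new = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new == assignment:  # converged: no point changed cluster
            break
        assignment = new
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    inertia = sum(sq_dist(p, centroids[a]) for p, a in zip(points, assignment))
    return centroids, assignment, inertia


# Synthetic standardized features (e.g. spend, visit frequency) around three centers.
rng = random.Random(42)
centers = [(0.0, 0.0), (5.0, 5.0), (0.0, 5.0)]
points = [(cx + rng.gauss(0, 0.4), cy + rng.gauss(0, 0.4)) for cx, cy in centers for _ in range(30)]

# Elbow method: inertia (total squared distance to the assigned centroid) for each k.
for k in range(1, 6):
    print(k, round(kmeans(points, k)[2], 1))
```

On this data, inertia drops steeply up to k = 3 and flattens afterwards, which is the bend the elbow method looks for; the silhouette score then offers an independent check on that choice.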

13. Walk me through your process for preparing a large dataset for analysis.

The question at hand delves into the candidate’s organizational skills, attention to detail, and their methodical approach to problem-solving. It’s about discerning if the candidate can handle the volume and complexity of data, cleanse it of inaccuracies, and structure it in a way that is conducive to extracting meaningful patterns and conclusions.

When responding to this question, candidates should outline a clear, step-by-step approach that starts with initial data inspection and ends with the dataset ready for analysis. This could include discussing data cleaning techniques such as handling missing values and outliers, data transformation methods like normalization or encoding categorical variables, and data reduction strategies if applicable. It’s important to articulate how each step contributes to the overall quality of the analysis and to highlight any specific tools or software that might be used to streamline the process. Demonstrating an understanding of the importance of each phase in the preparation process will convey the candidate’s proficiency and thoroughness in handling large datasets.

Example: “ When preparing a large dataset for analysis, my initial step is to conduct a preliminary inspection to understand its structure, content, and any inherent issues such as missing values, duplicates, or inconsistent formatting. I use statistical summaries and visualizations to get an overview of the data distribution and potential outliers.

Following the inspection, I begin the cleaning phase, where I handle missing values either by imputation or removal, depending on their significance and the amount of missing data. For duplicates, I employ deduplication techniques to ensure the dataset’s integrity. In cases of categorical variables, I apply encoding methods like one-hot encoding or label encoding, tailored to the specific algorithms I plan to use later. For numerical data, I consider normalization or standardization to bring all variables to a comparable scale, especially when using distance-based algorithms.

Lastly, I assess the need for data reduction techniques such as dimensionality reduction or feature selection to improve computational efficiency and model performance. Throughout the process, I leverage tools like pandas for data manipulation, scikit-learn for preprocessing, and visualization libraries such as matplotlib or seaborn to facilitate and validate each step. This systematic approach ensures that the dataset is optimized for analysis, allowing for more accurate and insightful results.”
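The encoding and scaling steps can be sketched with the standard library. The records are hypothetical. One caution the sketch does not handle: in a modeling workflow, the category list, mean, and standard deviation should be learned from the training data only and reused on new data; scikit-learn's `OneHotEncoder` and `StandardScaler` handle this through their `fit` and `transform` methods.

```python
from statistics import mean, pstdev

# Hypothetical records after cleaning.
rows = [
    {"region": "north", "spend": 120.0},
    {"region": "south", "spend": 80.0},
    {"region": "north", "spend": 100.0},
    {"region": "east", "spend": 60.0},
]


def one_hot(rows, col):
    """Replace a nominal column with one 0/1 column per category (sorted for a stable order)."""
    categories = sorted({r[col] for r in rows})
    encoded = []
    for r in rows:
        new = {k: v for k, v in r.items() if k != col}
        new.update({f"{col}_{cat}": int(r[col] == cat) for cat in categories})
        encoded.append(new)
    return encoded


def standardize(rows, col):
    """z-score: subtract the mean and divide by the population standard deviation,
    so large-scale columns don't dominate distance-based methods."""
    values = [r[col] for r in rows]
    mu, sigma = mean(values), pstdev(values)
    return [dict(r, **{col: (r[col] - mu) / sigma}) for r in rows]


prepared = standardize(one_hot(rows, "region"), "spend")
for r in prepared:
    print(r)
```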

14. How would you explain the significance of p-values in hypothesis testing to a non-technical audience?

Demystifying complex statistical concepts and communicating them effectively to stakeholders is a vital skill for data analysts. This ability is crucial because data analysts often need to justify their findings and decisions to those who rely on their insights but do not share their technical expertise.

To respond effectively, start by avoiding statistical jargon. Instead, use a simple analogy, such as a court case in which the defendant is presumed innocent, just as a hypothesis test starts by assuming the result is due to chance alone. Explain that a low p-value means the evidence would be very surprising if that assumption were true, so it is strong enough to reject the presumption of innocence (or chance), while a high p-value means the evidence isn’t strong enough to reach that conclusion. Emphasize that while p-values are not the sole determinant of an outcome, they are a helpful indicator of whether further investigation is warranted.

Example: “ Imagine you’re a detective trying to figure out whether a suspect was simply in the wrong place at the wrong time, or truly had a part in a crime. You start by assuming the innocent explanation. The p-value answers one question: if the suspect really were innocent, how likely is it that we would still see evidence this suspicious? If the p-value is really low, it’s like finding the suspect’s fingerprints on the safe: an innocent explanation becomes hard to believe, so we’re fairly confident the suspect’s presence wasn’t just a coincidence, and we might need to take a closer look. On the other hand, if the p-value is high, it’s as if the only evidence we have is that the suspect was in the neighborhood at some point, which an innocent person could easily explain. It doesn’t prove the suspect is innocent, but it’s not enough to act on.

So, a p-value helps us determine how much we should doubt a ‘business as usual’ scenario. It’s not a final verdict, but rather a signal. A low p-value doesn’t necessarily mean there’s a cause-and-effect relationship, just like a high p-value doesn’t prove there isn’t one. It’s a guide that tells us whether we should investigate further, not a definitive answer.”
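For the analyst's own understanding rather than for the audience, a permutation test makes the "business as usual" idea concrete: shuffle the group labels many times, as if the change made no difference, and count how often the shuffled data shows a gap at least as large as the real one. That fraction is the p-value. The order values below are hypothetical.

```python
import random


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Two-sided p-value for a difference in means: how often does randomly relabelling
    the data (i.e. assuming 'business as usual') produce a gap at least as large?"""
    rng = random.Random(seed)
    observed = abs(sum(group_b) / len(group_b) - sum(group_a) / len(group_a))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        a, b = pooled[:n_a], pooled[n_a:]
        if abs(sum(b) / len(b) - sum(a) / len(a)) >= observed:
            extreme += 1
    return (extreme + 1) / (n_shuffles + 1)  # +1 counts the observed labelling itself


old_checkout = [12, 15, 11, 14, 13, 12, 16, 14]  # hypothetical order values
new_checkout = [17, 19, 15, 18, 20, 16, 18, 19]
print(permutation_p_value(old_checkout, new_checkout))
```

Two identical groups give a p-value of 1.0, while the clearly separated groups above give a very small one.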

15. In which situations have you found time-series analysis particularly useful?

Time-series analysis is essential for forecasting, understanding seasonal patterns, and detecting anomalies. This question checks whether candidates can apply these analytical skills to real-world scenarios, which is key to driving strategic decisions and actions.

When responding, share specific examples from your experience where time-series analysis provided critical insights that informed decision-making. Perhaps you predicted sales spikes and inventory needs for a retail company or identified cyclical trends in user sign-ups for a subscription service. Explain the impact of your analysis on the business, such as improved resource allocation, more accurate budget forecasting, or enhanced customer satisfaction. Your answer should highlight your analytical prowess, problem-solving skills, and ability to translate data into actionable business strategies.

Example: “ Time-series analysis has proven invaluable in forecasting demand for a range of products, allowing for optimized inventory management and resource allocation. For instance, by analyzing historical sales data, I was able to identify not only the expected seasonal peaks and troughs but also the subtler weekly patterns that impacted stock levels. This analysis enabled the business to adjust procurement strategies accordingly, reducing both overstock and stockouts, which in turn led to improved cash flow and customer satisfaction.

In another scenario, time-series analysis was critical in understanding user engagement trends for a digital platform. By decomposing the series into trend, seasonal, and irregular components, I uncovered an underlying growth trend that was not immediately apparent due to noise in the data. This insight was pivotal in making informed decisions about marketing spend and product development, as it highlighted the periods of highest user acquisition and retention. The strategic adjustments made as a result of this analysis significantly boosted the platform’s user base and revenue.”
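The decomposition described in this answer can be sketched as a classical additive decomposition: a centered moving average estimates the trend, and averaging the detrended values at each position in the cycle gives the seasonal pattern. The daily sign-up series is synthetic (a linear trend plus a fixed weekly pattern), and the sketch handles only odd periods; statsmodels' `seasonal_decompose` and `STL` are the usual library routes.

```python
def centered_moving_average(series, period):
    """Trend estimate for an odd period: mean of the `period` values centred on each point.
    The first and last period // 2 positions lack a full window and are left as None."""
    half = period // 2
    return [
        sum(series[i - half:i + half + 1]) / period if half <= i < len(series) - half else None
        for i in range(len(series))
    ]


def decompose_additive(series, period):
    """Classical additive decomposition: series = trend + seasonal + remainder."""
    if period % 2 == 0:
        raise ValueError("this sketch only handles odd periods, e.g. 7 for weekly cycles")
    trend = centered_moving_average(series, period)
    # Average the detrended values at each position in the cycle.
    buckets = [[] for _ in range(period)]
    for i, (y, t) in enumerate(zip(series, trend)):
        if t is not None:
            buckets[i % period].append(y - t)
    seasonal_means = [sum(b) / len(b) for b in buckets]
    offset = sum(seasonal_means) / period
    pattern = [s - offset for s in seasonal_means]  # centre so the pattern sums to 0
    seasonal = [pattern[i % period] for i in range(len(series))]
    remainder = [None if t is None else y - t - s for y, t, s in zip(series, trend, seasonal)]
    return trend, seasonal, remainder


weekly = [10, 5, 0, -5, -10, 0, 0]  # hypothetical day-of-week effect
signups = [100 + 2 * d + weekly[d % 7] for d in range(28)]
trend, seasonal, remainder = decompose_additive(signups, 7)
print([round(s, 1) for s in seasonal[:7]])
```

Because the synthetic series is exactly trend plus pattern, the recovered `seasonal` values match `weekly` and the remainder is essentially zero; on real data, the remainder is where anomalies show up.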

16. Tell me about a project where you had to clean and organize unstructured data.

By asking about past projects, the interviewer is looking for evidence of your technical prowess, your methodical approach to problem-solving, and your diligence in ensuring data accuracy. This question also touches on your patience and attention to detail, as cleaning data can be a time-consuming process that requires a meticulous mindset.

When responding, highlight a specific project, the challenges you faced with the unstructured data, and the steps you took to clean and organize it. Discuss the tools and techniques you employed, such as scripting for automation or software for data cleaning. Emphasize the impact of your work on the project’s outcome, such as how it enabled accurate analysis, informed decision-making, or led to valuable insights. Your answer should convey your technical skills, your problem-solving abilities, and your commitment to quality in your work.

Example: “ In a recent project, I was tasked with cleaning and organizing a dataset that originated from various social media platforms. This data was extremely unstructured, with a mix of text, emojis, images, and video metadata. The primary challenge was to extract meaningful textual information and sentiment from the noise, which was crucial for our sentiment analysis model.

To tackle this, I first employed Python scripts with regular expressions to strip away irrelevant characters and normalize text data. I then used Natural Language Processing (NLP) libraries like NLTK and spaCy to parse and tokenize the text, which allowed me to filter out stop words and perform lemmatization. For the emojis, I mapped them to their corresponding sentiment scores using a predefined lexicon. To maintain data quality, I implemented a series of checks to identify and handle missing values and outliers.

The cleaned dataset not only improved the performance of our sentiment analysis model by 15% but also significantly reduced the computational resources required for processing. This efficiency gain allowed the team to iterate more rapidly on the model, leading to richer insights into consumer behavior and a more informed marketing strategy.”
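A minimal sketch of the regex and emoji steps described above. The stop-word list and emoji scores are tiny hypothetical stand-ins for NLTK or spaCy stop-word lists and a published emoji sentiment lexicon, and lemmatization is left out (spaCy exposes it as `token.lemma_`). The character-by-character emoji lookup assumes each emoji is a single code point, which does not hold for skin-tone or multi-part emoji.

```python
import re

# Hypothetical, deliberately tiny resources.
STOP_WORDS = {"the", "a", "an", "is", "and", "to", "it", "this", "so", "i"}
EMOJI_SENTIMENT = {"😍": 1.0, "🙂": 0.5, "😡": -1.0}

URL = re.compile(r"https?://\S+")
MENTION_OR_TAG = re.compile(r"[@#]\w+")


def clean_post(text):
    """Return (tokens, emoji_score) for one social-media post."""
    emoji_score = sum(EMOJI_SENTIMENT.get(ch, 0.0) for ch in text)
    text = URL.sub(" ", text)             # remove links first so their parts aren't tokenized
    text = MENTION_OR_TAG.sub(" ", text)  # drop @mentions and #hashtags
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS], emoji_score


print(clean_post("Love this phone 😍😍 #tech https://example.com/review @brand"))
```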

17. What strategies do you employ to identify and address biases in data analysis?

Recognizing and mitigating the effects of biases in data sets is fundamental to the integrity of any conclusions drawn from that data. The ability to identify and address biases is crucial in maintaining the credibility of the data, the analyst, and the organization.

When responding to this question, a candidate should highlight their experience with various types of biases such as sampling bias, confirmation bias, or measurement bias. They should discuss their familiarity with techniques for mitigating bias, which might include using robust data collection methods, validating the data set, employing statistical methods to adjust for biases, and cross-validating results with other data sources. It’s also beneficial to mention a continuous learning approach by staying updated with the latest methodologies and tools that help in reducing bias, as well as the importance of consulting with peers or experts in the field to gain different perspectives.

Example: “In addressing biases in data analysis, I prioritize a multi-faceted approach that begins with the design of the data collection process. To mitigate sampling bias, I ensure that the sample is representative of the population by employing stratified or random sampling techniques, depending on the context. I also incorporate redundancy in data sources when possible to cross-validate findings and identify potential biases arising from a single source.

Once data is collected, I apply statistical techniques such as regression analysis to control for confounding variables that might introduce bias. Similarly, when dealing with measurement bias, I use calibration techniques and error-correcting algorithms to adjust the data. I counter confirmation bias by rigorously testing hypotheses against null models and by avoiding overfitting through techniques like cross-validation. Throughout the analysis, I maintain a critical mindset, actively seeking disconfirming evidence to challenge initial assumptions. Peer reviews and collaborative analysis sessions are integral to this process, as they bring diverse perspectives that can uncover and correct biases that might not be apparent from a single analyst’s viewpoint.”
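Proportional stratified sampling, one of the techniques the answer names for reducing sampling bias, can be sketched as follows. The function `stratified_sample` and the record layout are hypothetical. Each stratum contributes a share of the sample proportional to its share of the population; because per-stratum counts are rounded, the total can differ from `sample_size` by a record or two when strata sizes don't divide evenly.

```python
import random
from collections import defaultdict


def stratified_sample(records, strata_key, sample_size, seed=0):
    """Draw a proportional stratified random sample from a list of dicts."""
    if not 0 < sample_size <= len(records):
        raise ValueError("sample_size must be between 1 and len(records)")
    rng = random.Random(seed)  # seeded so the draw is reproducible
    strata = defaultdict(list)
    for rec in records:
        strata[rec[strata_key]].append(rec)
    sample = []
    for members in strata.values():
        # Allocate draws in proportion to the stratum's share of the population.
        k = round(sample_size * len(members) / len(records))
        sample.extend(rng.sample(members, k))  # random draw without replacement
    return sample


# Hypothetical population: 80 customers in the north, 20 in the south.
population = ([{"id": i, "region": "north"} for i in range(80)]
              + [{"id": i, "region": "south"} for i in range(80, 100)])
sample = stratified_sample(population, "region", 10)
print(sum(r["region"] == "north" for r in sample))  # 8
```

A simple random sample of 10 from this population could easily contain zero or one southern customers; stratifying guarantees the 80/20 split is preserved.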

18. How do you determine the right sample size for your analyses?

Choosing the right sample size in data analysis is a balancing act that ensures results are representative of the larger population, minimizes errors, and supports credible conclusions. This question targets the candidate’s understanding of statistical principles and their ability to apply them in real-world scenarios.

When responding, you should outline your approach to determining sample size, which might include considerations of the population size, the margin of error you’re willing to accept, the confidence level you desire, and the expected effect size. You could mention specific statistical formulas, tools, or software you use to calculate sample size and describe how you factor in practical considerations like resource limitations. It’s also beneficial to illustrate your explanation with examples from past projects where you successfully determined an appropriate sample size.

Example: “Determining the right sample size is critical to ensure the validity and reliability of the analysis. I start by defining the statistical power I aim to achieve, typically 80% or higher, to detect a meaningful effect size. The effect size is informed by prior research or a preliminary analysis, which provides an estimate of the minimum difference or correlation that is practically significant for the study’s context.

I then consider the desired confidence level, commonly set at 95%, and the acceptable margin of error, which is a balance between statistical precision and resource constraints. To calculate the sample size, I use the Cochran formula for categorical data or the formula derived from the central limit theorem for continuous data, adjusting for population size if the population is finite and small enough that sampling without replacement affects the variance.

In practice, I also factor in the potential for non-response or dropout rates, which may require oversampling initially to ensure the final sample meets the size requirements. For instance, in a recent project analyzing customer satisfaction, I anticipated a 10% non-response rate, which I accounted for in the initial sample size calculation to maintain the power of the subsequent analysis. Using software like G*Power or statistical packages within R or Python, I can input these parameters to produce a sample size that is both statistically sound and feasible within the project’s constraints.”
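The calculations in this answer can be sketched with Python's standard library. `cochran_sample_size` applies Cochran's formula for a proportion, n0 = z² p(1 - p) / e², then the finite population correction n = n0 / (1 + (n0 - 1) / N), then inflates the result for expected non-response. `two_sample_n_per_group` is a power-based calculation for comparing two means with a standardized effect size d, using the normal approximation n = 2(z for 1 - α/2 + z for 1 - β)² / d². Both function names are hypothetical. Because the second function uses the normal rather than the t distribution, tools such as G*Power will typically return a slightly larger figure.

```python
import math
from statistics import NormalDist


def cochran_sample_size(margin_of_error, confidence=0.95, p=0.5,
                        population=None, nonresponse_rate=0.0):
    """Sample size for estimating a proportion (p=0.5 is the most conservative)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    n = z ** 2 * p * (1 - p) / margin_of_error ** 2     # Cochran's n0
    if population is not None:
        n = n / (1 + (n - 1) / population)              # finite population correction
    n = n / (1 - nonresponse_rate)                      # oversample for non-response
    return math.ceil(n)


def two_sample_n_per_group(effect_size, alpha=0.05, power=0.80):
    """Per-group n to detect a standardized mean difference (normal approximation)."""
    nd = NormalDist()
    z_alpha = nd.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = nd.inv_cdf(power)           # quantile matching the desired power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


print(cochran_sample_size(0.05))                                           # 385
print(cochran_sample_size(0.05, population=1000, nonresponse_rate=0.10))  # 309
print(two_sample_n_per_group(0.5))                                         # 63
```

The second call mirrors the customer-satisfaction example: a population of 1,000, a ±5% margin at 95% confidence, and a 10% non-response allowance.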

19. Can you provide an instance where you used geospatial data to enhance insights?

Using the location dimension of data to untangle complex problems is a skill employers value. This question assesses a candidate’s experience in leveraging geospatial data to provide a more nuanced understanding of the issue at hand.

When responding, it’s important to outline a specific scenario where geospatial data was pivotal. Explain the project’s objectives, how you sourced and integrated geospatial data, the tools and techniques used for analysis, and most crucially, the enhanced insights gained. Be sure to highlight the impact of these insights on the project’s outcome or the decision-making process, demonstrating your ability to transform raw data into actionable intelligence.

Example: “In a recent project, the objective was to optimize the distribution network for a retail chain. We sourced geospatial data including demographic information, traffic patterns, and competitor store locations. I integrated this data with the company’s internal sales and inventory data using QGIS for spatial analysis and Python for data manipulation and analysis.

By applying spatial autocorrelation techniques and hotspot analysis, we identified areas with high sales potential but inadequate service coverage. The insights allowed us to propose strategically located new stores and realign the distribution routes to minimize transit times and costs. The implementation of these recommendations resulted in a 15% reduction in logistics expenses and a noticeable increase in market penetration in under-served regions. This project showcased the power of geospatial data in unveiling patterns not immediately apparent through traditional data analysis methods.”
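Spatial autocorrelation, mentioned in the answer, is often summarized with global Moran's I, which compares each region's deviation from the mean with its neighbours' deviations: I = (N / W) × Σᵢ Σⱼ wᵢⱼ zᵢ zⱼ / Σᵢ zᵢ², where zᵢ is region i's deviation from the mean and W is the sum of all weights. Values well above zero mean similar values cluster together; values below zero mean they tend to alternate. The sketch below computes it from a plain spatial weights matrix. The function name and the four-region example are hypothetical, and in practice a GIS library such as PySAL, or QGIS itself, would build the weights and add significance testing. Hotspot statistics such as Getis-Ord Gi* are not shown.

```python
def morans_i(values, weights):
    """Global Moran's I for values observed at n locations.

    weights[i][j] > 0 when locations i and j are neighbours, 0 otherwise.
    """
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]                  # deviations z_i
    w_total = sum(sum(row) for row in weights)        # W, sum of all weights
    cross = sum(weights[i][j] * dev[i] * dev[j]
                for i in range(n) for j in range(n))  # sum of w_ij * z_i * z_j
    ss = sum(d * d for d in dev)                      # sum of z_i squared
    return (n / w_total) * (cross / ss)


# Four regions in a row; each borders the one before and after it.
chain = [[0, 1, 0, 0],
         [1, 0, 1, 0],
         [0, 1, 0, 1],
         [0, 0, 1, 0]]
print(morans_i([1, 2, 3, 4], chain))  # ~0.333: neighbours have similar sales
print(morans_i([1, 0, 1, 0], chain))  # -1.0: high and low regions alternate
```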

20. What steps do you take to stay updated with emerging trends and technologies in data analysis?

Staying current in data analysis is about maintaining a competitive edge and being able to provide the most efficient and insightful analysis possible. This question reflects the need for analysts who are self-motivated to learn and adapt in a dynamic field where stagnation can mean obsolescence.

When responding, highlight your proactive learning strategies, such as following key thought leaders on social media, subscribing to industry newsletters, participating in webinars, attending conferences, and taking online courses or certifications. Explain how you integrate new knowledge into your work, perhaps by experimenting with new tools on smaller projects or by sharing insights with your team to foster a culture of continuous learning and improvement. Show that your approach to staying informed is systematic and woven into your daily professional life, demonstrating both discipline and a genuine passion for the field of data analysis.

Example: “To stay at the forefront of data analysis, I maintain a disciplined approach to continuous learning. I regularly follow industry thought leaders on platforms like LinkedIn and Twitter, ensuring I’m exposed to diverse perspectives and the latest discussions. Additionally, I subscribe to several key newsletters and journals such as the Harvard Business Review’s Analytics series and the Data Science Central updates, which provide curated content on cutting-edge methodologies and case studies.

I also prioritize ongoing education through MOOCs on platforms like Coursera and edX, focusing on courses that cover emerging technologies and advanced analytical techniques. This lets me not only learn new theories but also apply them in practical scenarios. Moreover, I attend webinars and conferences, which are excellent for networking and gaining insights from real-world applications of new tools and strategies. By integrating these new skills and tools into smaller-scale projects, I can assess their efficacy and potential impact on larger initiatives. This practice of experimentation and sharing findings with my team fosters a collaborative environment of innovation and continuous improvement within our data analysis processes.”


Lesson 8 of 11 By Shruti M

Table of Contents

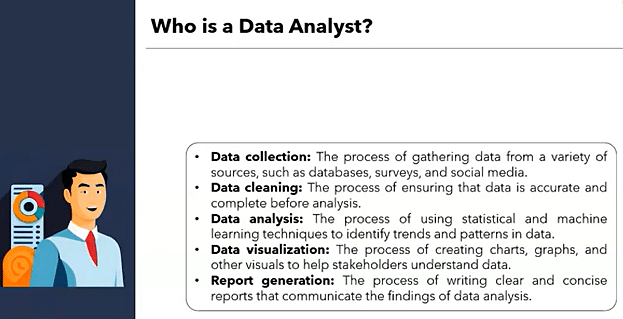

Data analytics is used in nearly every sector today. A career in data analytics is highly lucrative, and its potential keeps growing. Of the many job roles in this field, data analyst is one of the most popular worldwide. A data analyst collects and processes data and analyzes large datasets to derive meaningful insights from raw data.

If you plan to apply for a data analyst position, there is a set of interview questions you should be prepared for. This article covers the top data analyst interview questions to guide you through the interview process. Let’s start with general data analyst interview questions.


General Data Analyst Interview Questions

In an interview, these questions are more likely to appear early in the process and cover data analysis at a high level.

1. What are the differences between data mining and data profiling?

2. Define the term 'data wrangling' in data analytics.

Data wrangling is the process of cleaning, structuring, and enriching raw data into a usable format for better decision-making. It involves discovering, structuring, cleaning, enriching, validating, and analyzing data. The process transforms and maps large amounts of data extracted from various sources into a more useful format, using techniques such as merging, grouping, concatenating, joining, and sorting. Once wrangled, the data is ready to be analyzed or combined with other datasets.
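A minimal sketch of these wrangling steps, using only Python's standard library: it cleans a hypothetical order list (dropping rows with missing amounts and converting text to numbers), enriches each order by joining it to a customer-region lookup, groups revenue by region, and sorts the result. The field names, the `wrangle` function, and the data are invented for illustration; in practice a library such as pandas would usually handle the joins and grouping.

```python
from collections import defaultdict

# Hypothetical raw inputs: orders from one system, a customer lookup from another.
orders = [
    {"order_id": 1, "customer_id": "C1", "amount": "120.50"},
    {"order_id": 2, "customer_id": "C2", "amount": "80"},
    {"order_id": 3, "customer_id": "C1", "amount": None},
    {"order_id": 4, "customer_id": "C3", "amount": "45.25"},
]
customer_region = {"C1": "North", "C2": "South", "C3": "North"}


def wrangle(orders, customer_region):
    # Clean: drop rows with a missing amount and convert text amounts to floats.
    cleaned = [dict(o, amount=float(o["amount"]))
               for o in orders if o["amount"] is not None]
    # Enrich (join): attach each customer's region, defaulting to "Unknown".
    for row in cleaned:
        row["region"] = customer_region.get(row["customer_id"], "Unknown")
    # Group: total revenue per region.
    totals = defaultdict(float)
    for row in cleaned:
        totals[row["region"]] += row["amount"]
    # Sort: regions by revenue, highest first.
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


print(wrangle(orders, customer_region))  # [('North', 165.75), ('South', 80.0)]
```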

3. What are the various steps involved in any analytics project?

This is one of the most basic data analyst interview questions. The steps involved in a typical analytics project are as follows:

Understanding the Problem

Understand the business problem, define the organizational goals, and plan a solution that meets them.

Collecting Data

Gather the right data from various sources, prioritizing the information most relevant to the problem.

Cleaning Data

Clean the data to remove unwanted, redundant, and missing values, and make it ready for analysis.

Exploring and Analyzing Data

Use data visualization and business intelligence tools, data mining techniques, and predictive modeling to analyze the data.

Interpreting the Results

Interpret the results to uncover hidden patterns and future trends and to gain insights.

4. What are the common problems that data analysts encounter during analysis?

Common problems that data analysts encounter include:

- Handling duplicate records

- Collecting the right, meaningful data at the right time

- Handling data purging and storage problems

- Making data secure and dealing with compliance issues

5. Which technical tools have you used for analysis and presentation?

As a data analyst, you are expected to know the following popular tools for analysis and presentation:

MS SQL Server, MySQL

For working with data stored in relational databases.

MS Excel, Tableau

For creating reports and dashboards.

Python, R, SPSS

For statistical analysis, data modeling, and exploratory analysis.

MS PowerPoint

For presenting the final results and key conclusions.

6. What are the best methods for data cleaning?

- Create a data cleaning plan by understanding where common errors occur, and keep communication channels open.

- Identify and remove duplicates before working with the data. This makes the analysis process easier and more effective.

- Focus on the accuracy of the data. Set up cross-field validation, keep data types consistent, and enforce mandatory constraints.

- Normalize the data at the point of entry so that it is less chaotic. This ensures that all information is standardized and leads to fewer entry errors.
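These cleaning practices can be sketched in a few lines of standard-library Python. The record layout, the `clean_records` helper, and the rule that a user's last login cannot precede their signup date are hypothetical. The sketch normalizes values first so that near-duplicates such as " Ana@x.com " and "ana@x.com" collapse into one record, enforces a mandatory field and a type check, applies one cross-field validation, and returns rows that fail validation for review rather than silently discarding them.

```python
from datetime import date


def clean_records(records):
    """Normalize, validate, and de-duplicate hypothetical user records."""
    seen, clean, rejected = set(), [], []
    for rec in records:
        # Normalize at the entry point: trim whitespace, standardize case.
        norm = dict(rec,
                    email=rec["email"].strip().lower(),
                    country=rec["country"].strip().upper())
        # Mandatory constraint and type check.
        if not norm["email"] or not isinstance(norm["signup"], date):
            rejected.append(norm)
            continue
        # Cross-field validation: last login cannot precede signup.
        if norm["last_login"] < norm["signup"]:
            rejected.append(norm)
            continue
        # Remove duplicates, keyed on the normalized email.
        if norm["email"] in seen:
            continue
        seen.add(norm["email"])
        clean.append(norm)
    return clean, rejected


rows = [
    {"email": " Ana@x.com ", "country": "us", "signup": date(2024, 1, 1), "last_login": date(2024, 2, 1)},
    {"email": "ana@x.com", "country": "US ", "signup": date(2024, 1, 1), "last_login": date(2024, 2, 1)},
    {"email": "bo@x.com", "country": "ca", "signup": date(2024, 3, 1), "last_login": date(2024, 1, 1)},
]
clean, rejected = clean_records(rows)
print(len(clean), len(rejected))  # 1 1
```

Normalizing before de-duplicating matters: compared as raw strings, the first two rows would not look like duplicates.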

7. What is the significance of Exploratory Data Analysis (EDA)?

- Exploratory data analysis (EDA) helps you understand the data better.

- It helps you obtain confidence in your data to a point where you’re ready to engage a machine learning algorithm.

- It allows you to refine your selection of feature variables that will be used later for model building.